Monzo has grown a lot over the last 8 years, bringing with it a rapid expansion of our data warehouse and a host of challenges to tackle. Today’s blog post is an overview of how we leverage incremental modelling, a data transformation technique which helps us scale sustainably as we blast off to 10 million customers 🚀

Before diving in, a whistle-stop tour of Monzo’s data set-up (though definitely read our in-depth blog post on the topic!):

Data transformation via dbt sits at the heart of our data stack, and we maintain our own extended version of the tool with custom-built functionality.

The majority of data landing in our BigQuery data warehouse arrives via analytics events emitted from the >3000 microservices which power Monzo.

We ingest >3 billion events on an average day, and this number is only going up!

🤔 What is incremental modelling?

Incremental modelling is one of dbt’s materialisation strategies - the ways in which dbt translates data models written in SQL into persisted tables or views in the data warehouse. Using an incremental materialisation strategy means only transforming new data each time dbt is run, instead of constantly rebuilding entire tables from scratch.

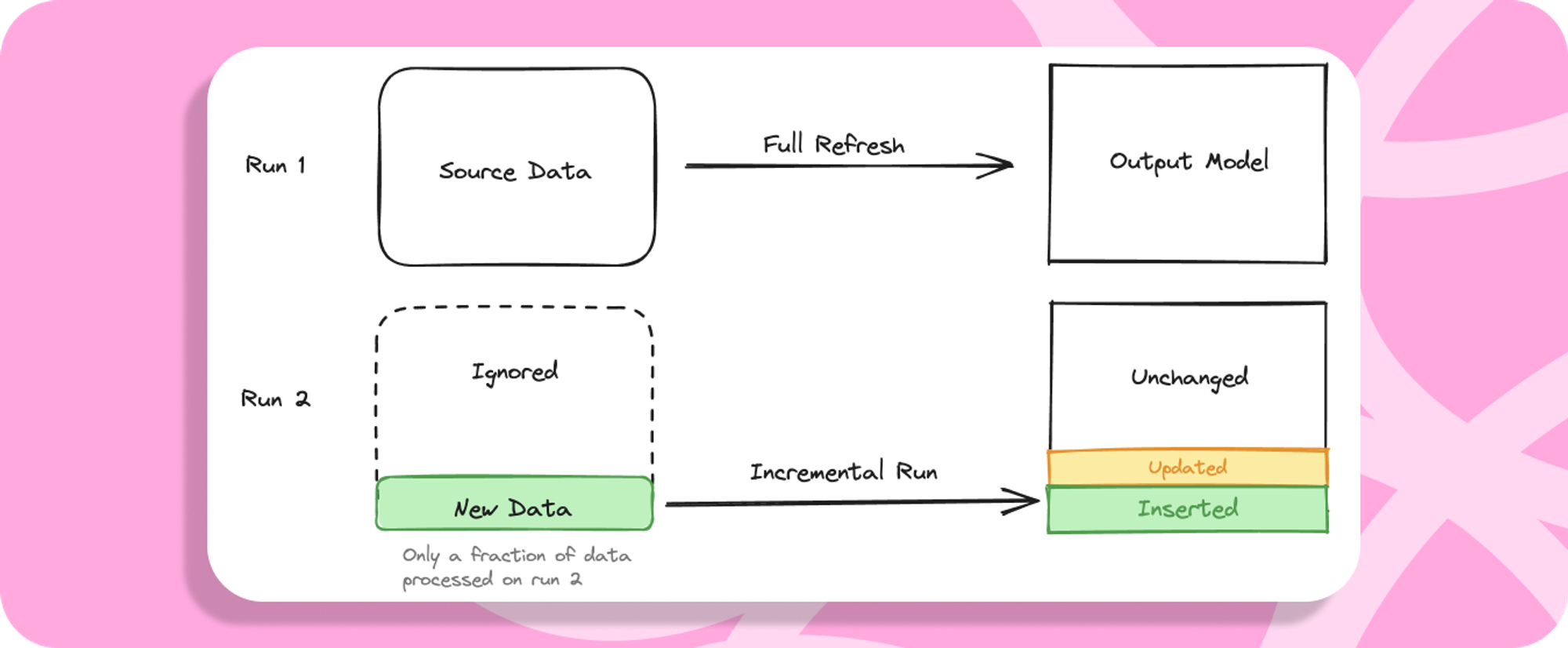

Incremental dbt models can run in two different ways depending on the context:

A full refresh, where all upstream data is processed to generate the target table. A full refresh will run when an incremental model is built for the very first time, or can be triggered explicitly at any point.

An incremental run, where only new data is transformed and the rest is ignored. To get the benefits of incremental modelling, this should be the behaviour for the majority of runs.

Incremental runs process significantly less data than full refreshes
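To make the two run modes concrete, here is a minimal Python sketch of the idea. It is an illustration only, not how dbt or BigQuery implement it: the function name `build_model`, the column names `timestamp` and `amount_pence`, and the placeholder transformation are all assumptions.

```python
from datetime import datetime


def build_model(source_rows, target_rows=None, full_refresh=False):
    """Return (new target table, number of source rows processed)."""

    def transform(row):
        # Placeholder transformation: keep the row and add a derived column.
        return {**row, "amount_pounds": row["amount_pence"] / 100}

    if full_refresh or target_rows is None:
        # Full refresh: rebuild the target from all upstream data.
        # This also covers the very first build, when no target exists yet.
        return [transform(r) for r in source_rows], len(source_rows)

    # Incremental run: only process rows newer than what the target holds.
    high_water_mark = max(
        (r["timestamp"] for r in target_rows), default=datetime.min
    )
    new_rows = [r for r in source_rows if r["timestamp"] > high_water_mark]
    return target_rows + [transform(r) for r in new_rows], len(new_rows)
```

On the first call every source row is processed; on later calls only rows past the high-water mark are touched, which is where the time and cost savings come from.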

🚀 How incremental modelling helps us scale

In the ever-growing data landscape at Monzo, there are two major reasons why incremental modelling is so important to us:

Improved landing times - It’s critical that the whole business can make timely strategic decisions and consistently work with fresh data, which means we strive for models to land as early as possible in the day. Incremental runs typically take 80% less time compared to full refreshes, so they are a huge part of ensuring our data pipeline runs quickly.

Decreased costs - As a rapidly scaling company, it’s important we don’t fall into the trap of allowing our infrastructure costs to spiral. Our incremental models typically cost 60% less to run in BigQuery, so incrementalising our most expensive models is one of the biggest levers we have for pipeline cost reduction.

These performance benefits are critical to the scalability of our data warehouse, allowing us to maintain a robust data pipeline that can handle Monzo’s growth without degrading over time 💪

🧑‍💻 Embedding incremental modelling into our ways of working

Despite the clear performance benefits of incremental modelling, ensuring its widespread usage is a challenge because building incremental models can be difficult. As a materialisation strategy it is more complex than standard tables or views, because it requires extra inputs:

Filtering logic for selecting the new data to process - which can get particularly complex if joining together multiple upstream sources in a single model. This filtering logic also needs to be wrapped in jinja - a Python-like templating language supported by dbt - to ensure the filter is only applied during an incremental run.

Configuration for how the new data should be handled - e.g. should it be merged into the target model - allowing existing rows to be ‘updated’ - or just appended on to the end?
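The difference between the two handling strategies can be sketched in a few lines of Python. This is a simplified illustration under assumed names (`append_rows`, `merge_rows`, and a `unique_key` column), not the warehouse's actual merge logic.

```python
def append_rows(target, new_rows):
    # Append: new rows are added to the end; existing rows are never touched.
    return target + new_rows


def merge_rows(target, new_rows, unique_key):
    # Merge: a new row replaces any existing row with the same key,
    # otherwise it is inserted.
    merged = {row[unique_key]: row for row in target}
    for row in new_rows:
        merged[row[unique_key]] = row
    return list(merged.values())
```

Appending suits immutable records such as raw events, while merging is needed when an incoming row represents a newer state of something already in the table.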

At Monzo our goal is to make incremental modelling straightforward to understand and easy to implement by everyone in our data team, so we have a range of tools and processes in place to help achieve that.

Automatic code generation

Where possible, we avoid having to do too much heavy lifting for incremental modelling by leveraging repeatable code. One example of this is through automatic model generation, taking advantage of the fact that analytics events which land in our warehouse all have the same basic structure. We transform these source tables into the first layer of models in our dbt project with a single command line function created by our in-house engineers:

new-base-event events_schema.flashy_new_event

This automatically generates a model file called base_flashy_new_event.sql, complete with basic transformation and fully-functioning incremental logic which ensures only new events are processed and appended each time dbt runs. This automatic code generation ensures the foundation of our warehouse is incremental and we consistently handle new sources of events in a scalable way with minimal added effort.
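As a rough idea of what such a generator could look like, the Python sketch below renders a dbt model file from a template. The real tool's output is not shown in this post, so the template body and the `event_timestamp` column are assumptions; `config`, `source`, `is_incremental()` and `this` are standard dbt jinja constructs.

```python
from string import Template

# Hypothetical template for a base event model. string.Template only
# substitutes $-placeholders, so the jinja braces pass through untouched.
MODEL_TEMPLATE = Template("""\
{{ config(materialized='incremental') }}

select *
from {{ source('$schema', '$event') }}
{% if is_incremental() %}
-- Only applied on incremental runs: keep events newer than those already loaded.
where event_timestamp > (select max(event_timestamp) from {{ this }})
{% endif %}
""")


def new_base_event(source_table):
    """Return (filename, model SQL) for a source such as 'schema.event_name'."""
    schema, event = source_table.split(".")
    filename = f"base_{event}.sql"
    return filename, MODEL_TEMPLATE.substitute(schema=schema, event=event)
```

Calling `new_base_event("events_schema.flashy_new_event")` returns the filename `base_flashy_new_event.sql` along with the rendered SQL. The jinja `if` block is what keeps the filter out of full refreshes.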

Advanced incremental modelling with custom materialisations

In the ‘deeper’ layers of our dbt project, where we work with more sophisticated table structures such as aggregated facts, dimensional snapshots or slowly-changing dimensions, modelling incrementally poses additional challenges. The approach is typically not as straightforward as the ‘append’ strategy described above for raw events, and often involves smarter filtering logic to identify which rows need to be updated in the target table.

Despite the added complexity of these models, we still strive to abstract away the fiddly aspects of incremental builds so people can focus their energy on value-adding code. Our centralised analytics engineering team - affectionately known as ‘the hub’ - have built a number of custom materialisations to enable this. These materialisations, combined with a small amount of additional config to dbt files, are able to generate a range of different table structures, all of which are fully incremental.

For example, one such in-house materialisation is called episodes_incremental, which generates models with a structure similar to a type 2 slowly changing dimension. With a few lines of config describing the primary key of the entity being modelled and the relevant timestamp columns in the contributing tables, we can seamlessly generate a fully incremental model of this type, complete with the ability to gracefully handle late-arriving data - a topic discussed in more detail later in this post.
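For readers unfamiliar with the target shape, the Python sketch below shows what a type 2 style ‘episodes’ table looks like when derived from a stream of changes. It is not the episodes_incremental materialisation itself, and it rebuilds everything rather than working incrementally; the names `build_episodes`, `user_id`, `changed_at` and `plan` are assumptions for illustration.

```python
from datetime import datetime


def build_episodes(changes, key="user_id", ts="changed_at", value="plan"):
    """Turn change records into episodes with valid_from / valid_to bounds."""
    episodes = []
    open_episode = {}  # latest (still open) episode per entity
    # Sorting first means late-arriving records slot into the right place.
    for row in sorted(changes, key=lambda r: (r[key], r[ts])):
        current = open_episode.get(row[key])
        if current is not None and current[value] == row[value]:
            continue  # value unchanged, so the current episode carries on
        if current is not None:
            current["valid_to"] = row[ts]  # close the previous episode
        episode = {
            key: row[key],
            value: row[value],
            "valid_from": row[ts],
            "valid_to": None,  # None marks the currently valid episode
        }
        episodes.append(episode)
        open_episode[row[key]] = episode
    return episodes
```

Each entity ends up with a chain of non-overlapping episodes, with only the most recent one left open.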

Internal data health reporting

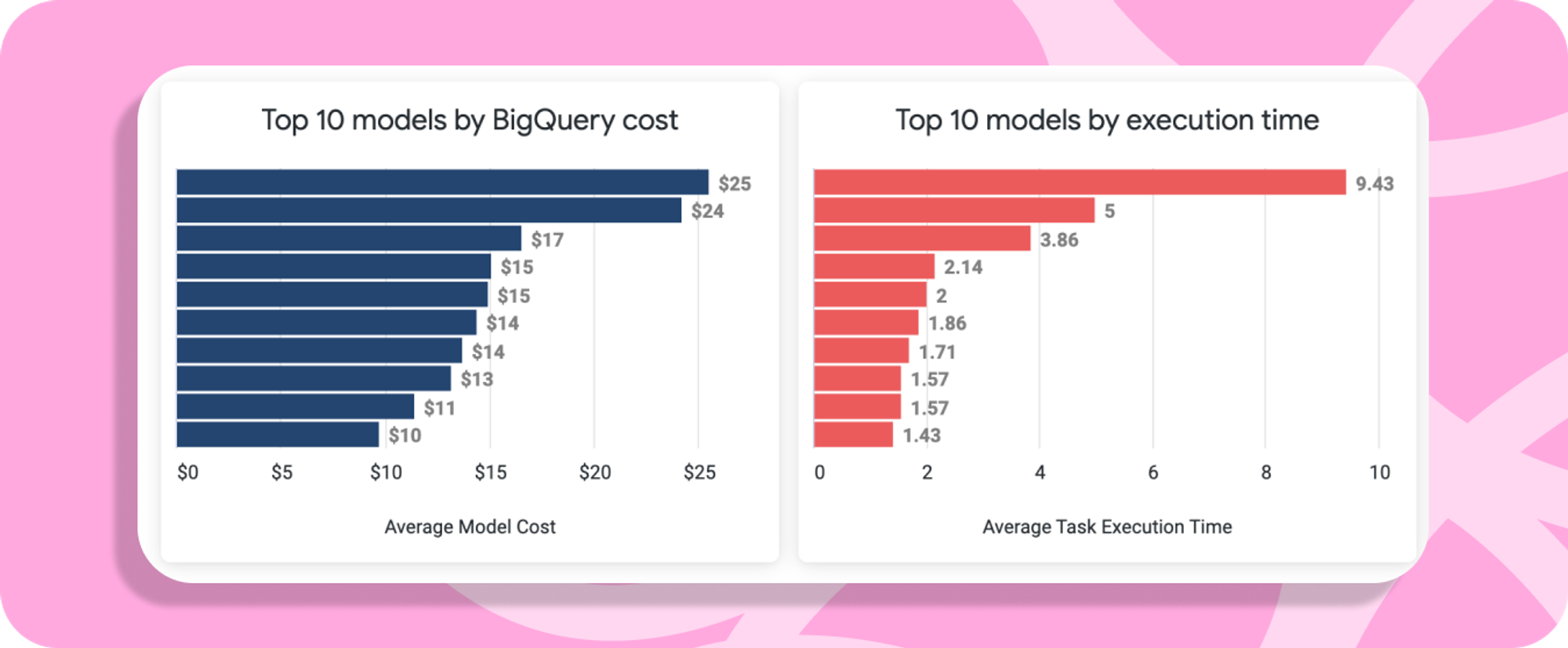

Another way we encourage incremental modelling is by empowering teams to identify models they own which would benefit most from being incrementalised. A wide range of metadata about our model builds, query runtimes and BigQuery costs is ingested into our warehouse and surfaced in Looker, to help teams decide what they should be looking to optimise. This internal visibility is critical to identifying inefficiencies in our pipeline, and fosters a culture of continuous iterative improvement within our warehouse.

An example of the type of reporting available to all of our data team, helping to identify good candidates for incremental modelling

Training and documentation

At Monzo, we pride ourselves on hiring people from a range of professional backgrounds and we operate with a strong culture of shared ownership over our data warehouse. We want everyone in our data team to feel empowered to contribute to its improvement, and we know that lots of new joiners will have never built an incremental model or worked with dbt before. To ensure everyone feels comfortable with these methods, we provide a comprehensive onboarding for all our data new-joiners, from the basics of dbt right through to incremental modelling, jinja and macro creation.

We’re also passionate about documentation, maintaining extensive guidance on incremental modelling and other warehouse optimisations which is constantly evolving as new methods emerge.

🦺 Safeguarding our pipeline integrity

Whilst we use all the tools described above to make incremental modelling as straightforward as possible, we also recognise that working with incremental models has intrinsic risks and challenges, which we look to proactively address.

Blue-green deployment and reconciliation checks

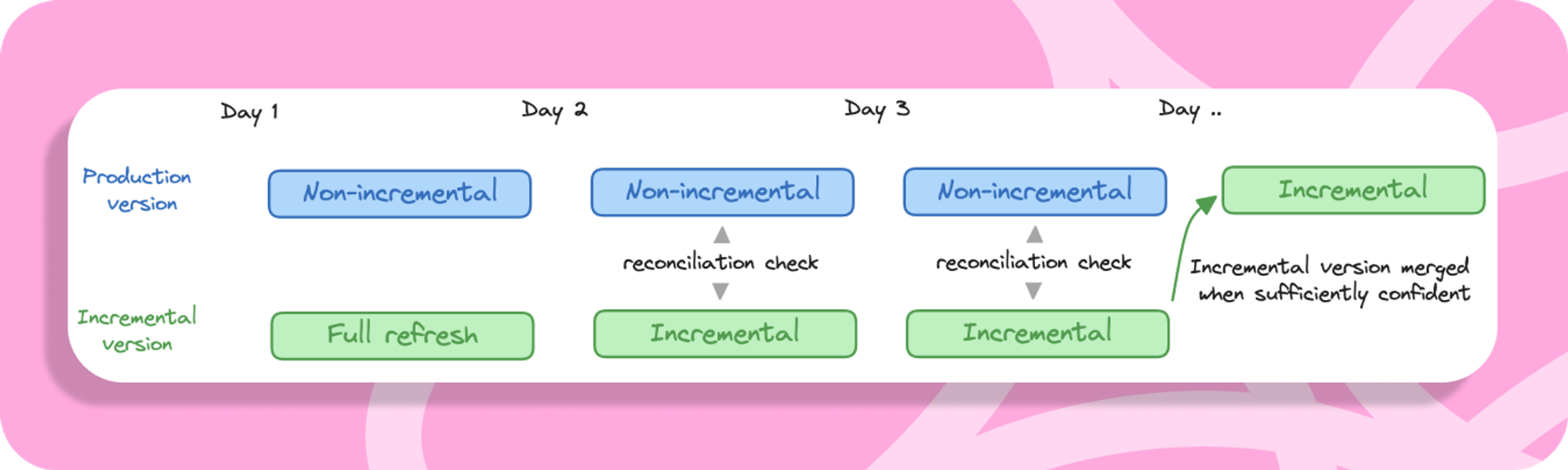

When developing incremental models, an important check is that the incremental version of a model generates identical results to its non-incremental equivalent. It can be challenging to determine this through basic development workflows, particularly with complex models which may have many upstreams.

In such cases, we often run a form of blue-green deployment, maintaining two parallel versions of the same model and checking that they consistently remain identical. Once we build sufficient confidence that they are equivalent, we remove the non-incremental version and reap the rewards of the incremental build.

A blue-green deployment allows us to build confidence in releasing incremental models

These workflows are supported by reconciliation scripts, which can granularly compare the results of two different models and check that they are wholly identical.
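A reconciliation check of this kind can be sketched in Python as a keyed comparison of two result sets. The function names and report structure below are assumptions for illustration; a real script would typically run as SQL in the warehouse rather than in memory.

```python
def reconcile(rows_a, rows_b, primary_key):
    """Compare two versions of a model row by row, keyed on primary_key."""
    a = {r[primary_key]: r for r in rows_a}
    b = {r[primary_key]: r for r in rows_b}
    return {
        "only_in_a": sorted(a.keys() - b.keys()),
        "only_in_b": sorted(b.keys() - a.keys()),
        # Keys present in both versions but with differing column values.
        "mismatched": sorted(k for k in a.keys() & b.keys() if a[k] != b[k]),
    }


def is_identical(report):
    # The models match only if every category of difference is empty.
    return not any(report.values())
```

Reporting the differing keys, rather than a single pass/fail flag, makes it much easier to trace a discrepancy back to the filtering logic that caused it.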

Remedying model drift through scheduled full refreshes

Even with the reconciliation described above, because incremental models rely on identifying ‘new data’, they can be vulnerable to late-arriving or out-of-sequence data. With Monzo’s event-driven architecture, we can’t guarantee that all events are delivered perfectly on time or in a specific order, so we are particularly at risk of this. We shield ourselves against this by leveraging lookback windows - pulling back not just totally new data, but also a buffer of extra time in the past. However, sometimes events still fall outside of these windows, and we run into model ‘drift’. Drift is where, over time, the incremental version of a model increasingly differs from a non-incremental version with the same logic.
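The Python sketch below illustrates how a lookback window catches some late events but not all of them. The three-hour window, the `event_id` and `event_ts` columns and the function name are assumptions chosen for the example; it also assumes the target table is not empty.

```python
from datetime import datetime, timedelta


def incremental_run(source_rows, target_rows, lookback=timedelta(hours=3)):
    """Merge source rows newer than (latest loaded timestamp - lookback)."""
    high_water_mark = max(r["event_ts"] for r in target_rows)
    cutoff = high_water_mark - lookback
    # Re-read a buffer of already-covered time to pick up late arrivals.
    candidates = [r for r in source_rows if r["event_ts"] > cutoff]
    # Merge on event_id so re-read rows do not create duplicates.
    merged = {r["event_id"]: r for r in target_rows}
    for r in candidates:
        merged[r["event_id"]] = r
    return sorted(merged.values(), key=lambda r: r["event_ts"])
```

If the target holds events up to 12:00, an event stamped 11:00 that arrives late is still picked up, but one stamped 08:00 falls before the 09:00 cutoff and is silently missed. That missed row is exactly the kind of difference that accumulates into drift.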

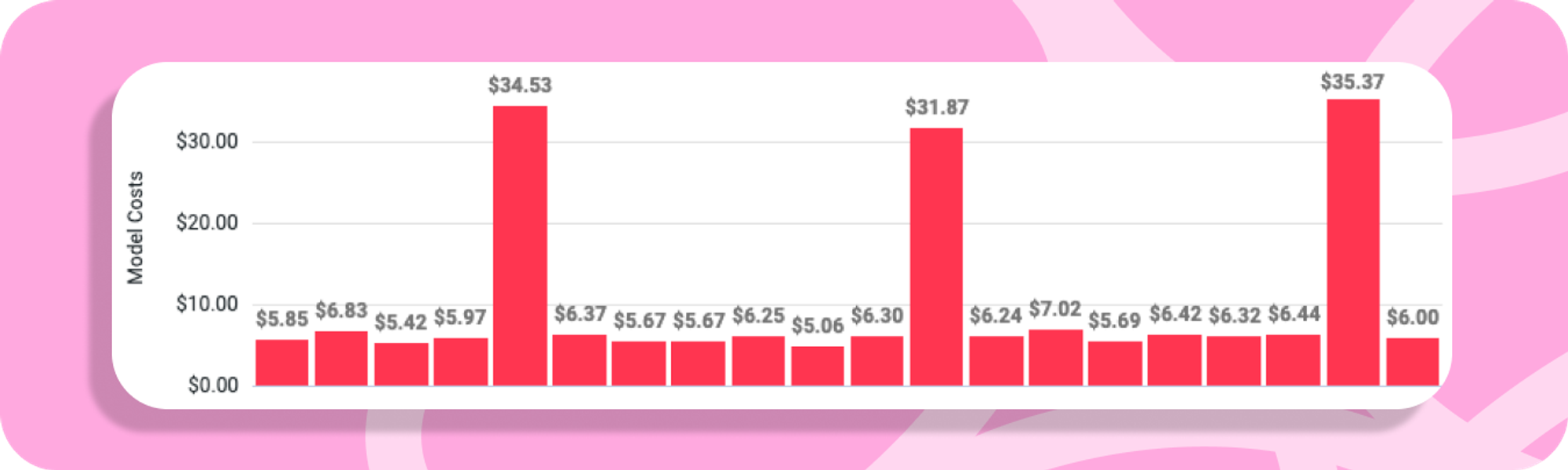

We correct for this with another in-house customisation, making use of optional config in our incremental models to periodically trigger a full refresh. We typically schedule these for the weekend, when there is less overall pipeline activity so we have more resource available for intensive jobs. These scheduled full refreshes bring the underlying model back ‘in sync’ and course-correct any drift that may have occurred since the last full refresh. This does mean we periodically have to spend extra on an otherwise inexpensive model - but we believe these costs are worth it for the improved reliability of our pipeline.

An example of a model subject to scheduled full refreshes. Note how much cheaper the incremental runs are, compared to the weekly full refresh.
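The scheduling decision itself is simple, and might look something like the Python sketch below. The config key `full_refresh_weekday` is hypothetical and stands in for whatever optional config the model declares.

```python
from datetime import date


def run_mode(run_date, config):
    """Pick the run mode for a model on a given date."""
    # Hypothetical optional config: weekday to fully refresh on,
    # using Python's convention of Monday=0 ... Sunday=6.
    scheduled_day = config.get("full_refresh_weekday")
    if scheduled_day is not None and run_date.weekday() == scheduled_day:
        return "full_refresh"
    return "incremental"
```

With `{"full_refresh_weekday": 5}` a model fully refreshes every Saturday and runs incrementally on all other days; models without the key never refresh on a schedule.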

Preventing pipeline incidents through automatic full refreshes

Incremental modelling also introduces new ways for model runs to fail. Because it works by trying to update or insert into an existing table, if code changes are introduced that cause an incompatibility between the incoming data and the target table, a run failure can occur. For example, let’s assume one of our team changes a column’s type from a string to an integer, but doesn’t fully refresh the model. When dbt attempts to insert the new incremental data into the existing model, there will be a clash of data types and the run will fail.

To prevent this from causing a pipeline incident that blocks all downstream models scheduled after the failing one, we have customised our incremental materialisation logic such that it automatically checks if a run will fail and falls back to a full refresh. This ensures that we can safely make changes to incremental models without having to remember to manually fully refresh - our extended dbt framework will automatically recognise when it’s required and prevent a pipeline failure.
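One simple way to picture such a check is a comparison of column types before the insert, sketched below in Python. The exact checks performed by the custom materialisation are not described here, so the rule used (any new column or changed type forces a full refresh) and the function name are assumptions.

```python
def choose_run_mode(target_schema, incoming_schema):
    """Compare {column: type} mappings and pick a safe run mode."""
    for column, incoming_type in incoming_schema.items():
        existing_type = target_schema.get(column)
        # Assumed rule: a column missing from the target, or one whose
        # type has changed, would make the incremental insert fail.
        if existing_type is None or existing_type != incoming_type:
            return "full_refresh"
    return "incremental"
```

In the string-to-integer example above, the target table has `STRING` where the new code produces `INT64`, so the check returns `full_refresh` instead of letting the insert fail.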

🗻 Some challenges still to tackle

As you can see, we have lots of tooling and processes within Monzo to support incremental modelling. But there still remains a host of challenges we haven’t fully resolved, and tonnes of opportunity for us to improve our workflows further, such as:

Seamlessly propagating the effect of code changes - When we make a logic change to a table upstream of incremental models, how can we ensure the impact of the change gets propagated throughout the full lineage? Can we be smarter than just fully refreshing everything downstream?

Preventing accidental time bombs - Although we want the majority of our incremental model runs to be incremental, it’s important that all - except our very largest - data models can be fully refreshed without timing out. How can we proactively identify if an incremental model is becoming so large that it will no longer be able to be fully refreshed, so we can intervene before a failure?

⛳️ Conclusion

Hopefully you’ve enjoyed this short glimpse into what working on the data team at Monzo is like, and some of the exciting tools and strategies we get to work with on a daily basis. Incremental modelling is just one of many ways we strive to optimise our modelling practices and build a best-in-class data warehouse 🚀

If you work in data and are interested in joining the team - we are hiring! We’re always looking for talented individuals passionate about good data practices, so get in touch if you like the sound of the way we work - or have ideas to help us be better 👀