Our customers reasonably expect to be able to spend on their card, make bank transfers and pay their bills 24 hours a day, 365 days a year. Their lives don’t have downtime for maintenance so nor should we. We dedicate a lot of our engineering effort to minimise the risk of downtime during technical migrations and other day-to-day operations, but unforeseen incidents that cause unexpected outages are impossible to eliminate entirely.

We take reliability seriously at Monzo, so we built a completely separate backup banking infrastructure called Monzo Stand-in. It adds another layer of defence so customers can continue to use our most important services during an outage. We consider Monzo Stand-in to be a backup of last resort that gives us an extra line of defence, not our primary mechanism for providing a reliable service to our customers.

Monzo Stand-in Architecture

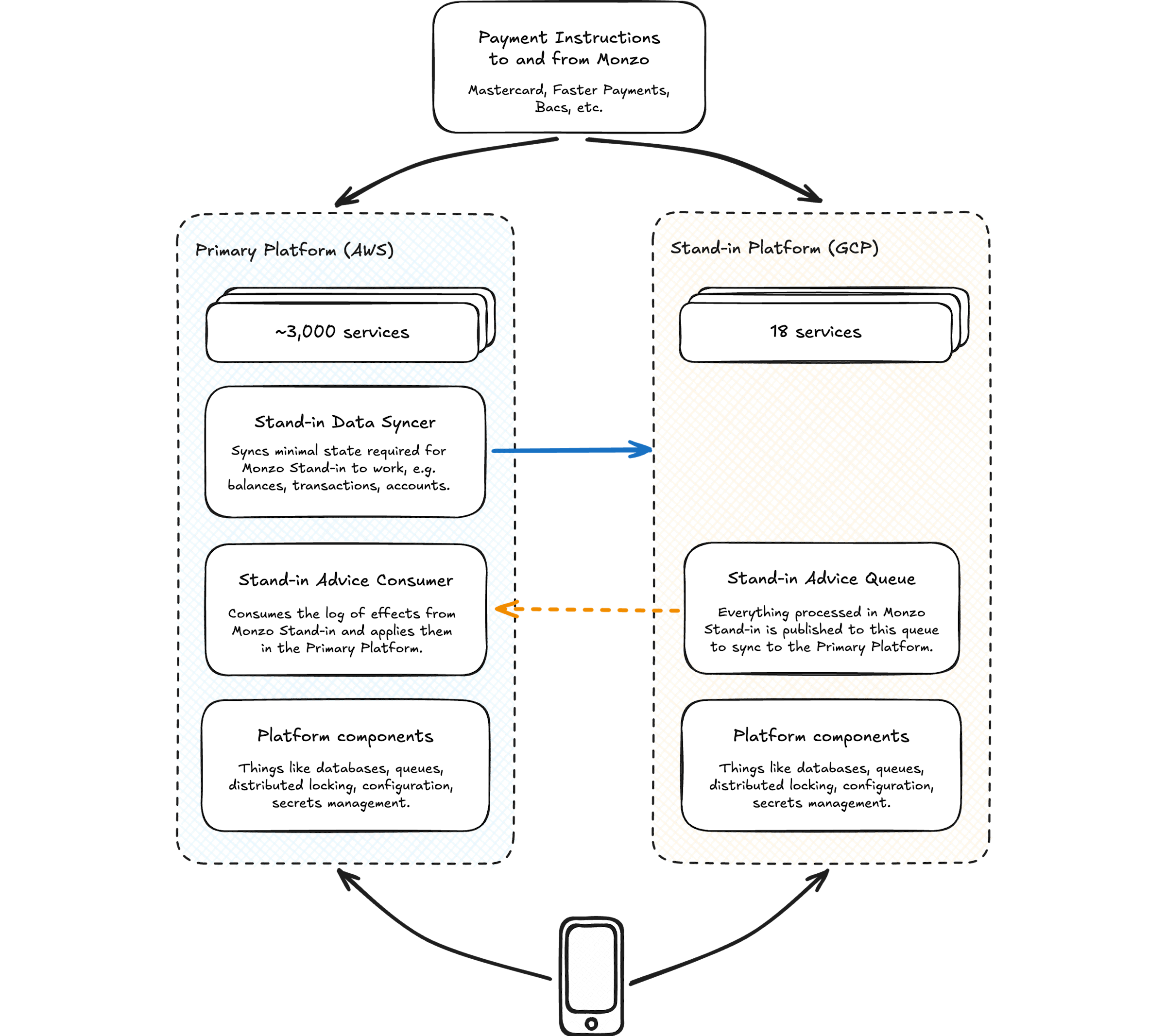

Monzo Stand-in is an independent set of systems that runs on Google Cloud Platform (GCP) and is able to take over from our Primary Platform, which runs in Amazon Web Services (AWS), in the event of a major incident. It supports the most important features of Monzo like spending on cards, withdrawing cash, sending and receiving bank transfers, checking account balances and transactions, and freezing or unfreezing cards.

Our Primary and Stand-in Platforms run independently of one another, each consisting of Kubernetes clusters running a unique set of services on top of typical platform components such as a database, queueing systems and locking mechanisms. The services running in each platform are unique in the sense that services in the Stand-in Platform don’t ever run in the Primary Platform, or vice versa, even for behaviours that are common across both like processing a card payment.

Each platform is able to make its own decisions about approving or declining transactions and can establish its own connections to payment networks via multiple physical data centres.

Monzo Stand-in also runs a limited set of API endpoints tailored to serve limited functionality while we’re using Stand-in. The Monzo App checks whether Monzo Stand-in is enabled periodically in the background, and if it is, it flips to a simplified UI that supports our most important features.

Different systems help to mitigate risk

It might seem strange to build brand new services from the ground up for Monzo Stand-in rather than deploy the same services we run on the Primary Platform, but there are a number of motivations for us taking this approach.

If we tried to run the exact same set of services we would need to replicate all of our data between the two platforms. To do this well we’d have to maintain strong consistency of our data. This would mean that writes to our database would be considered successful only if the data is written to both platforms. If either platform became unavailable we’d be unable to write anything without sacrificing consistency, reducing our overall availability rather than improving it.
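To put rough numbers on this, here’s a small worked example. It assumes the two platforms fail independently of each other, and the availability figures are illustrative only, not our real numbers. The function name is hypothetical.

```python
def dual_write_availability(primary: float, standin: float) -> float:
    """Availability of writes that must succeed on both platforms.

    Assumes the two platforms fail independently, so the probability that
    both are up at the same time is the product of their availabilities.
    """
    for value in (primary, standin):
        if not 0.0 <= value <= 1.0:
            raise ValueError("availability must be between 0 and 1")
    return primary * standin


# Illustrative figures only: two platforms that are each up 99.9% of the time.
combined = dual_write_availability(0.999, 0.999)
print(f"{combined:.6f}")  # prints 0.998001
```

Under these assumptions, requiring every write to land on both platforms gives lower write availability than either platform has on its own.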

Instead of maintaining strong data consistency we could accept that the replication is non-blocking and eventually consistent, but systems like our ledger wouldn’t be able to tolerate eventually consistent data.

Different software reduces the chance of suffering the same failure

Our Primary Platform operates across AWS availability zones and it runs multiple replicas of all our services. We design systems to be scalable and we gracefully degrade when non-critical dependencies error. Even with resiliency baked into our Primary Platform’s design, complex systems such as these can fail in surprising ways.

There are a large number of possible reasons our Primary Platform could fail. While it’s easy to assume that the risk we want to mitigate is a cloud provider outage, it’s at least as likely that a bug in our code or processes is the cause of an outage.

Traditional Disaster Recovery systems predominantly consider hardware failure, assuming the most likely risk to their platform is a network outage or a disk failure. Cloud platform providers like AWS, GCP, Azure and others have for the most part solved for outages caused by hardware failure, but disaster recovery hasn’t really evolved. Today it doesn’t matter how many data centres you have if you run the same software in them all.

The greater the independence between the Stand-in environment and the Primary Platform, the smaller the risk that the same issue will impact the Stand-in Platform. In our case, our Primary and Stand-in Platforms run their own independent card issuer processing code, each capable of authorizing transactions. Whilst the platforms are expected to behave in similar ways we implement them separately and aim to minimise the reliance on shared code as much as possible.

A minimal system doesn’t cost the world

We monitor the costs of running our platforms very closely. Monzo Stand-in costs around 1% of the cost of our Primary Platform to keep running in the background, and we would only expect this to increase marginally if we enabled it during a major incident. If instead we wanted to run all of the same systems and replicate all the data then the cost in terms of compute capacity and people needed to maintain it would be much larger, potentially doubling our total platform costs.

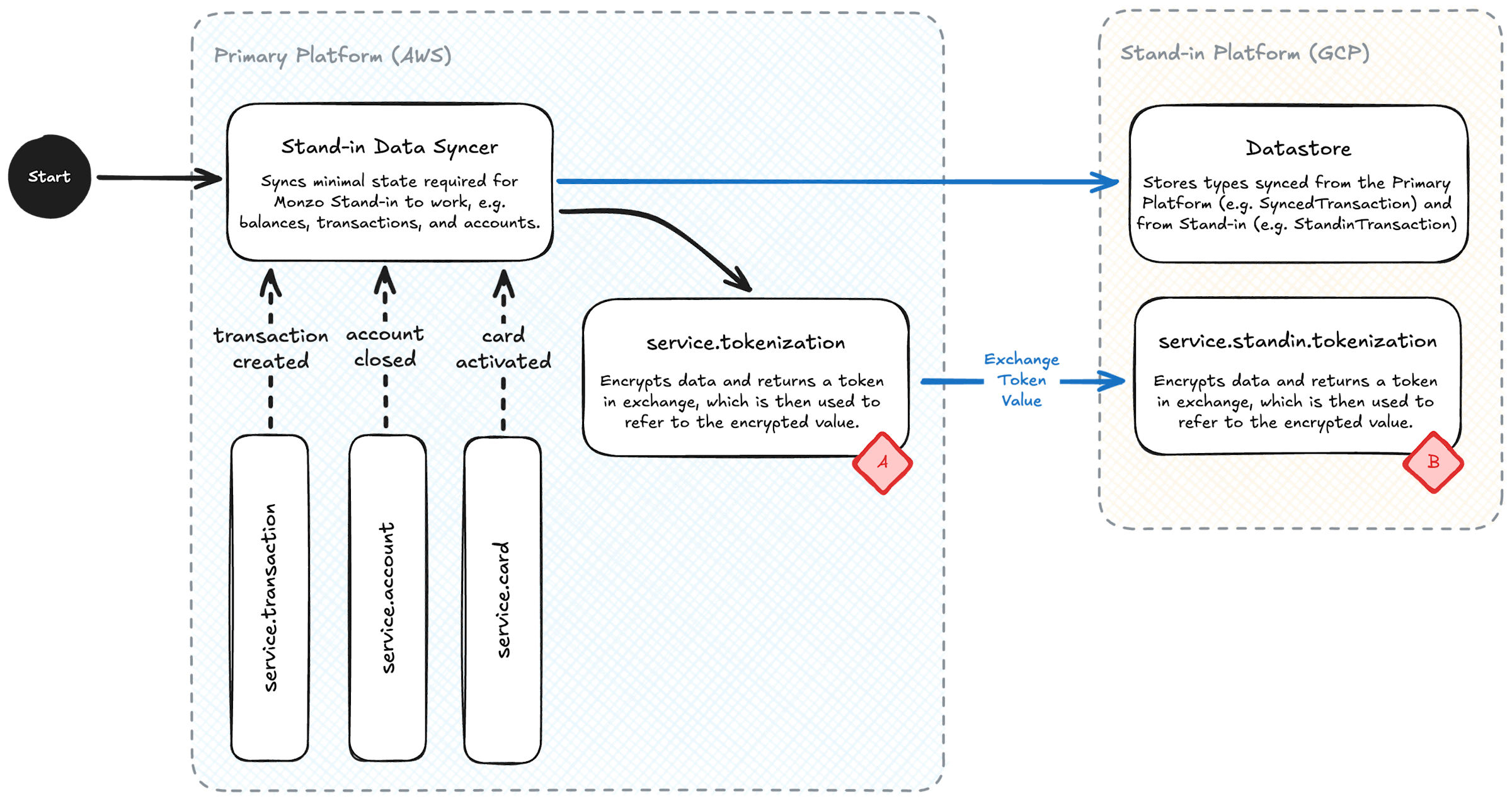

Syncing data between platforms

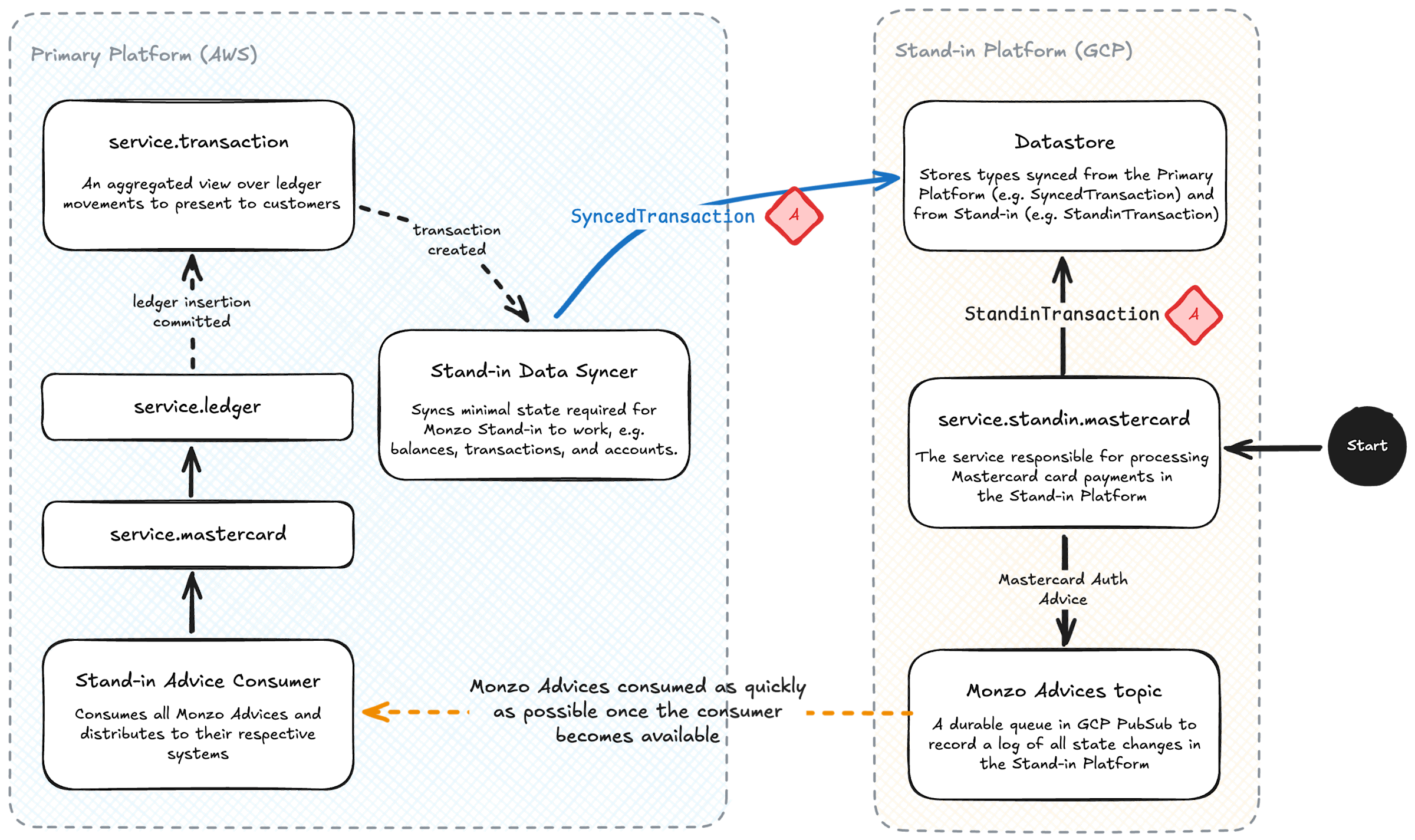

Monzo Stand-in only contains the minimal state required to support the small number of features it supports. This includes things like balance and limited historic transaction information, enough details about cards and accounts to process payments, and a list of other things like pots and payees. Whenever any of this data changes in the Primary Platform our Stand-in Data Syncer writes those updates to the Stand-in Platform. All processing outcomes, state transitions, and other effects created in the Primary Platform are published into our event system, and the Stand-in Data Syncer consumes a subset of these events to trigger the process of updating state in the Stand-in Platform.
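As a minimal sketch of this flow, the snippet below filters a stream of events down to the subset Stand-in needs and writes them to a store. The event type names, the `Event` shape and the in-memory `StandInStore` are all hypothetical and for illustration only; they aren’t taken from our real systems.

```python
from dataclasses import dataclass, field

# Hypothetical event types; the real event names are not given in this post.
SYNCED_EVENT_TYPES = frozenset(
    {"balance.updated", "card.updated", "transaction.created"}
)


@dataclass(frozen=True)
class Event:
    type: str
    entity_id: str
    payload: dict


@dataclass
class StandInStore:
    """In-memory placeholder for the Stand-in Platform's database."""

    records: dict = field(default_factory=dict)

    def write(self, event: Event) -> None:
        # Key by event type and entity so a later update replaces the older copy.
        self.records[(event.type, event.entity_id)] = event.payload


def sync_events(events, store: StandInStore) -> int:
    """Write the subset of events Stand-in needs; return how many were written."""
    written = 0
    for event in events:
        if event.type not in SYNCED_EVENT_TYPES:
            continue  # Stand-in only holds minimal state, so skip everything else.
        store.write(event)
        written += 1
    return written


store = StandInStore()
events = [
    Event("balance.updated", "acc_1", {"balance": 1200}),
    Event("marketing.email_sent", "acc_1", {}),
    Event("balance.updated", "acc_1", {"balance": 900}),
]
written = sync_events(events, store)
```

Here only the two balance events are written, and the store ends up holding the most recent balance for the account.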

All data we sync from the Primary to the Stand-in Platform is treated as immutable. We don’t expect the Stand-in Platform to be running on a perfectly consistent view of the world, but in practice the view is extremely close to perfect consistency due to the real-time nature of the syncing. We monitor the lag of this eventually consistent syncing process very closely and alert in the rare cases where the lag exceeds our appetite.
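A lag check of this kind could look something like the sketch below. The threshold value and function names are assumptions made for illustration; this post doesn’t state our real lag appetite or how the alerting is implemented.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical threshold; the real lag appetite is not stated in this post.
MAX_ACCEPTABLE_LAG = timedelta(seconds=5)


def sync_lag(published_at: datetime, applied_at: datetime) -> timedelta:
    """Time between an event being published and being applied in Stand-in."""
    # Clamp at zero so small clock differences never produce a negative lag.
    return max(applied_at - published_at, timedelta(0))


def lag_exceeds_appetite(
    lag: timedelta, threshold: timedelta = MAX_ACCEPTABLE_LAG
) -> bool:
    """Return True when the measured lag should trigger an alert."""
    return lag > threshold


published = datetime(2024, 8, 1, 12, 0, 0, tzinfo=timezone.utc)
applied = published + timedelta(seconds=8)
lag = sync_lag(published, applied)
alert = lag_exceeds_appetite(lag)
```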

Our tokenized data (e.g. card PANs that we encrypt) follows a similar but slightly different flow for syncing, where we exchange data encrypted for different keysets (labelled A and B in the diagram) between tokenization systems in each platform.

Syncing state from Monzo Stand-in

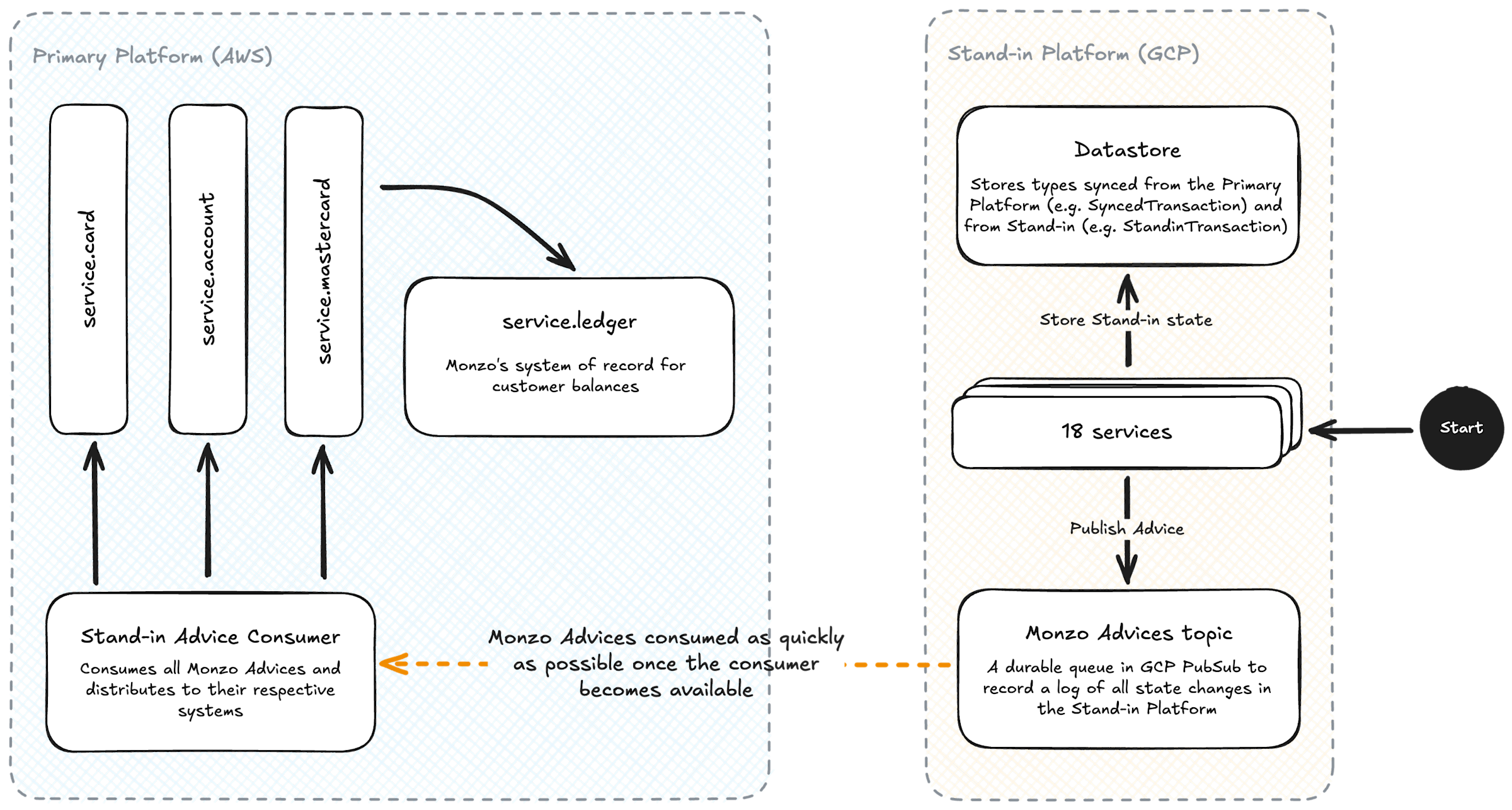

When Monzo Stand-in is enabled it produces similar processing decisions and state transitions as we do in the Primary Platform, but since this isn’t our Primary Platform, those outcomes are only authoritative for the duration that we’re operating in Stand-in.

We store new state in Monzo Stand-in separately to immutable data synced from the Primary Platform, and we record a log of all effects in a durable queue for the Primary Platform to consume when it’s able to do so. If the Primary Platform is only partially unavailable it may be able to consume this queue immediately or it may only be able to do so at a future date if we’re suffering a complete outage.

The records of effects in this log are referred to as Monzo Advices, as they each advise our Primary Platform of an effect that was created, for example a card payment that was approved, and we expect Primary Platform to apply the effects of these Advices verbatim.

The Primary Platform is our system of record, maintaining our records of truth, and at no point while Monzo Stand-in is enabled does it assume the role of our system of record. This implies that by applying Monzo Advices verbatim from a potentially inconsistent view of a customer’s balance in Monzo Stand-in we may have approved a transaction where the Primary Platform believes the customer had insufficient funds, and in this case would take that customer into an unapproved overdraft. We operate a number of controls in our Primary Platform to help with this possibility but in practice it’s extremely unlikely to occur.
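The sketch below illustrates what applying Advices verbatim means for a balance. The `Advice` and `Ledger` types are hypothetical, and skipping Advices that have already been applied is our assumption about how a replayed queue would be handled safely, not a description of the real implementation.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Advice:
    advice_id: str
    account_id: str
    amount: int  # minor units; negative for money leaving the account


@dataclass
class Ledger:
    """Simplified placeholder for the Primary Platform's ledger."""

    balances: dict = field(default_factory=dict)
    applied: set = field(default_factory=set)

    def apply(self, advice: Advice) -> bool:
        """Apply an Advice verbatim; return False if it was already applied."""
        if advice.advice_id in self.applied:
            return False  # Assumption: redelivered Advices are ignored.
        # No funds check here: the decision was already made in Stand-in.
        current = self.balances.get(advice.account_id, 0)
        self.balances[advice.account_id] = current + advice.amount
        self.applied.add(advice.advice_id)
        return True


# The Primary Platform believes the balance is 500, but Stand-in approved
# a payment of 800 against a slightly stale view of the balance.
ledger = Ledger(balances={"acc_1": 500})
advice = Advice("adv_1", "acc_1", -800)
first = ledger.apply(advice)
second = ledger.apply(advice)  # simulated redelivery from the queue
```

In this example the account ends up 300 overdrawn, which is the unapproved overdraft case described above, and the redelivered Advice has no further effect.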

Correlating data held by both platforms

When we apply a Monzo Advice from the Stand-in Platform to the Primary Platform we expect downstream systems to create an equivalent state. For example an Advice for a card payment in Stand-in will move money in the Ledger in the Primary Platform.

This creates a small problem for us. We now have state in both the Stand-in and Primary Platforms that represents the same payment, and when money is moved in the Ledger in the Primary Platform a Transaction is also synced to Stand-in by our Data Syncer as we described earlier.

To avoid double-counting Transactions and other effects in Stand-in we generate a Correlation ID (labelled A in the diagram) for StandinTransactions and SyncedTransactions, and merge our view of correlated transactions when we use them in the Stand-in Platform.
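A minimal sketch of this merge is shown below. The `Transaction` shape is hypothetical, and letting the synced copy take precedence over the Stand-in copy is an assumption made for the example; the post doesn’t specify which record wins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    correlation_id: str
    amount: int  # minor units
    source: str  # "standin" or "synced"


def merged_view(standin_txns, synced_txns):
    """Return one transaction per Correlation ID across both sources."""
    merged = {txn.correlation_id: txn for txn in standin_txns}
    for txn in synced_txns:
        # Assumption: the copy synced from the Primary Platform replaces
        # the Stand-in copy that represents the same payment.
        merged[txn.correlation_id] = txn
    return list(merged.values())


standin_txns = [
    Transaction("corr_1", -500, "standin"),
    Transaction("corr_2", -250, "standin"),
]
synced_txns = [Transaction("corr_1", -500, "synced")]

view = merged_view(standin_txns, synced_txns)
total = sum(txn.amount for txn in view)
```

Without the merge, the payment with Correlation ID `corr_1` would be counted twice and the total would be -1250 instead of -750.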

Enabling Monzo Stand-in

We’ve mentioned our ability to enable Monzo Stand-in, so we want to explain what we mean. We run a Stand-in Configuration service in the Primary Platform and another in the Stand-in Platform. The two are for the most part API-compatible, and together they coordinate which platform is enabled, which components of the Stand-in Platform are enabled, and which users they’re enabled for. When we detect an outage in a service we deem critically important to our customers, we can enable the corresponding parts of Monzo Stand-in with this configuration system.
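To illustrate the shape of this configuration, here’s a small sketch of a per-component, per-user check. The `StandInConfig` type, its fields and the component names are hypothetical; the real configuration API isn’t described in this post.

```python
from dataclasses import dataclass, field


@dataclass
class StandInConfig:
    """Hypothetical view of which parts of Stand-in are enabled, and for whom."""

    enabled_components: set = field(default_factory=set)
    all_users: bool = False
    enabled_users: set = field(default_factory=set)

    def is_enabled(self, component: str, user_id: str) -> bool:
        # A component must be switched on before any user can be moved to it.
        if component not in self.enabled_components:
            return False
        return self.all_users or user_id in self.enabled_users


# Card payments are enabled in Stand-in, but only for one test user.
config = StandInConfig(
    enabled_components={"card_payments"},
    enabled_users={"user_123"},
)
```

With this example configuration, `config.is_enabled("card_payments", "user_123")` is true, while the same check for any other user or for a component that isn’t enabled is false.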

Currently we update the configuration using our Stand-in Platform CLI tooling, but you can see that with further work we can fully automate this process by triggering the configuration system on the same heuristics our engineers use manually.

This system works even if the Primary Platform is completely unavailable, and as mentioned before, the Monzo App checks both platforms periodically in the background to understand whether to display Monzo Stand-in’s limited experience. Disabling Monzo Stand-in is also a conscious decision that engineers make manually, so at the point the Primary Platform’s API becomes available again it doesn’t instantly receive all app traffic again. We’re able to roll the Monzo App back to the Primary Platform gradually.

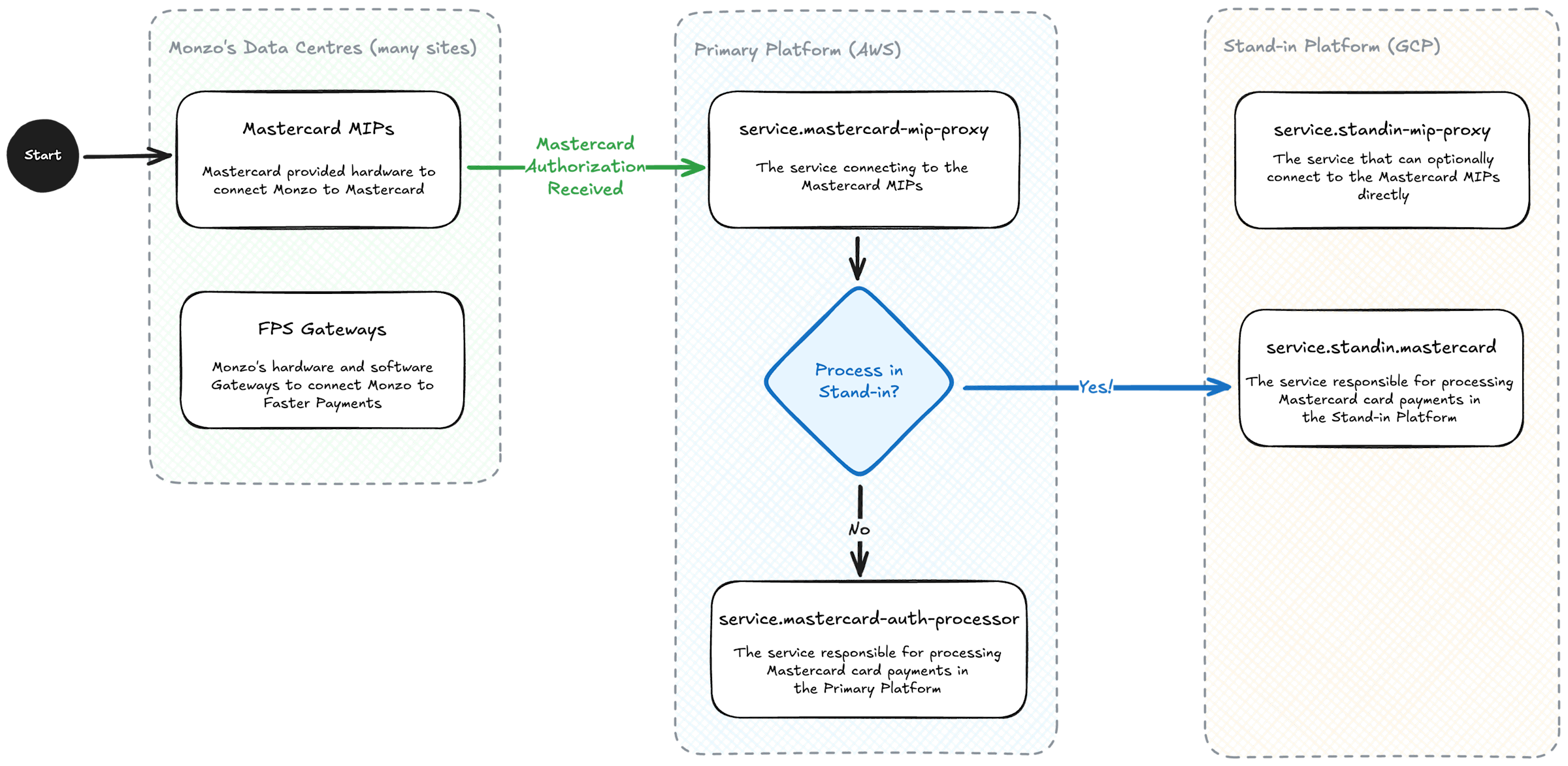

Routing payment traffic through the Primary Platform

When we enable Monzo Stand-in for payments processing, the system initially continues to route payments traffic through the Primary Platform, which proxies it to the Stand-in Platform. It might sound counterintuitive, but it gives us much greater control over how many customers move to Monzo Stand-in or back from Monzo Stand-in, and for some use cases even explicitly which customers move to Monzo Stand-in, while the rest of our customers’ payments remain in the Primary Platform. It also helps us to recover from an outage very quickly.

Our system for deciding whether our payments traffic should be routed to the Primary or Stand-in Platform payments processors runs on each payment message received.
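A per-message routing decision like this can be sketched as follows. The `RoutingPolicy` type and the hash-based percentage bucketing are assumptions chosen for the example; this post doesn’t describe how the real system selects customers.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class RoutingPolicy:
    """Hypothetical policy: a rollout percentage plus explicitly chosen users."""

    standin_percent: int = 0  # 0 to 100
    forced_users: frozenset = frozenset()


def bucket(user_id: str) -> int:
    """Map a user to a stable bucket from 0 to 99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def route(user_id: str, policy: RoutingPolicy) -> str:
    """Decide, for one payment message, which platform should process it."""
    if user_id in policy.forced_users:
        return "standin"
    if bucket(user_id) < policy.standin_percent:
        return "standin"
    return "primary"


policy = RoutingPolicy(standin_percent=0, forced_users=frozenset({"user_123"}))
forced = route("user_123", policy)
other = route("user_456", policy)
```

Because the bucket is derived from the user ID, the same customer gets the same decision on every message for a given policy, and raising or lowering `standin_percent` moves customers gradually in either direction.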

This works for most incidents but for payments processing it doesn’t work well if our Primary Platform is completely down. In this case we’re able to connect the Stand-in Platform directly to payments networks via our Data Centres. This is a bit more of a heavy-handed option for us, as we have much less control over which customers or how much of the traffic is directed to the Stand-in Platform, but we wouldn’t be resilient without the option.

Both of these routes, proxying payments to Monzo Stand-in via the Primary Platform or receiving them directly in the Stand-in Platform, are tested rigorously and continuously, in production, to prove that the system will work if we need to use it.

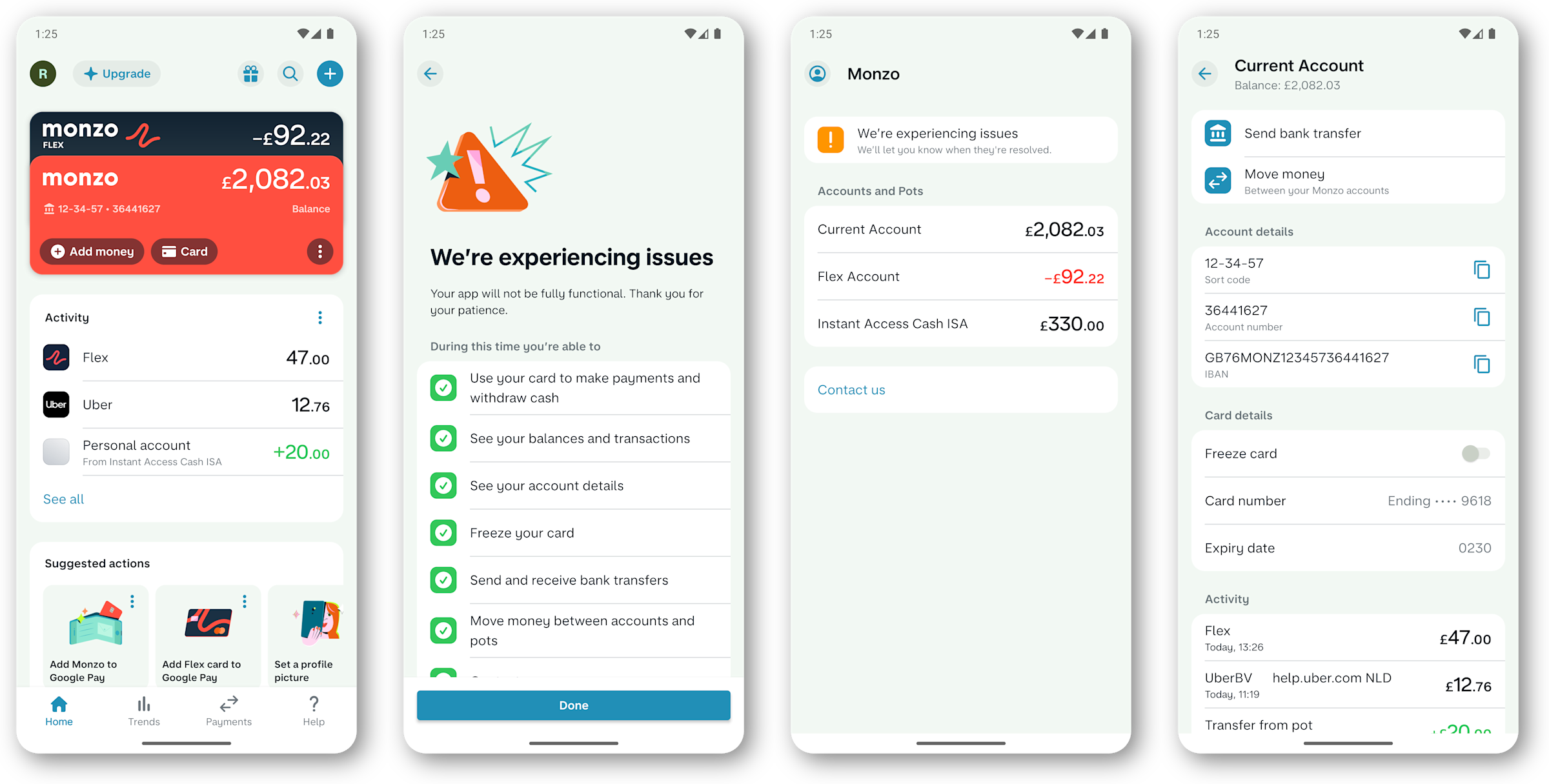

You might have seen Monzo Stand-in

In August 2024 we suffered a major platform incident that impacted most of our systems, including our ability to process payments and to serve the Monzo App. The outage itself lasted for approximately 1 hour, but we enabled Monzo Stand-in very shortly after detecting a problem to make sure our customers could still use their money.

This wasn’t the first time we had used Monzo Stand-in, and in fact for testing we always have small numbers of customers using it, but it was the first time we enabled all components of Monzo Stand-in for all of our customers. If you happened to open your Monzo App during this time you would have seen some clear differences to the usual experience, but the most important tasks like checking your balance, sending and receiving bank transfers and using cards continued working.

Closing thoughts, for now

Monzo Stand-in has been a huge success, helping us to prove that we’re resilient to critical platform outages in both policy and practice. With operational resiliency at the forefront of many technical leaders’ and regulators’ minds, and with the EU’s DORA and other regulations coming into force, we believe we’re leading change from the front with a practical, cost-effective, and less burdensome approach to resiliency than traditional Disaster Recovery solutions.

There’s still so much more to Monzo Stand-in than we’ve touched on in this post. We intend to write more in this series on Monzo Stand-in to give deeper dives into the complexities of specific components of the system, including how payment processing works, the technical details of the configuration system, and the innovative ways we test it.

If you want to join us to build reliable and resilient systems that serve millions of customers, view our open roles in engineering!