Have you ever opened your app at exactly 4pm the day before payday to use our Get Paid Early feature? Or received a push notification about your ‘Year in Monzo’ and opened it immediately? Then you’ve potentially been part of what we call a stampeding herd. This is the term we use when large numbers of our customers open the app within a very short time period. If we aren’t prepared for these moments, we can exhaust our buffer capacity before we’re able to scale our platform up. In the worst cases, shared infrastructure could become overloaded, causing widespread disruption.

We’ve talked about handling Get Paid Early load before, but in this post we’ll explore how we’ve made our platform more resilient to spikes in app opens. We now have the capability to reduce load on our platform before we get overwhelmed so you can still access and use the most critical parts of the app, and your card continues to work as normal.

Why are stampeding herds a problem?

When you open the Monzo app we want you to see up-to-date data so you can manage your money quickly and efficiently. To optimise for this, we make a bunch of requests when you first open the app to preload things like balances and transactions. We make similar requests when you receive a push notification from us so the app is primed in case you open it.

Usually our platform can cope with this load just fine, but if we receive a spike in app opens, it can cause a sharp increase in load on multi-tenant services and systems like our database or distributed locks infrastructure.

Not all of the services we provide to customers are equally important. Even so, high load from a spike in app opens could overwhelm these shared resources and cause disruption across the board, from services responsible for non-essential things like changing your Monzo app icon, right up to critical systems such as processing card payments.

We wanted to explore how we could protect the most important services from spikes in app opens, even if that meant degrading our quality of service during extreme circumstances.

How many requests could we ignore without any customer impact?

The first question I set out to answer was how much wasted work we do today. I defined this as GET requests which were made multiple times within 24 hours and got an identical response.

To measure this I added event logging for 1 in 1,000 users within the edge proxy that all external requests come through. The events contained the full URL of the request and a hash of the response bytes, so I could work out how often we got the same response to the same request without having to worry about logging any zero-visibility data like customer PINs.
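A minimal sketch of how such a measurement could be computed offline from the logged events. The `RequestEvent` fields, the choice of SHA-256, and the `wasted_fraction` helper are all assumptions for illustration; the post only states that events carried the full URL and a hash of the response bytes.

```python
import hashlib
from dataclasses import dataclass

WINDOW_SECONDS = 24 * 60 * 60  # the 24-hour window used in the definition above


@dataclass(frozen=True)
class RequestEvent:
    """One sampled GET request as logged at the edge (field names are assumptions)."""
    user_id: str
    url: str
    response_hash: str
    timestamp: float  # seconds since the epoch


def hash_response(body: bytes) -> str:
    """Log a digest rather than the body, so sensitive data never reaches the logs."""
    return hashlib.sha256(body).hexdigest()


def wasted_fraction(events: list[RequestEvent]) -> float:
    """Fraction of events that have an identical twin (same user, URL and
    response hash) earlier in the preceding window."""
    last_seen: dict[tuple[str, str, str], float] = {}
    wasted = 0
    for event in sorted(events, key=lambda e: e.timestamp):
        key = (event.user_id, event.url, event.response_hash)
        previous = last_seen.get(key)
        if previous is not None and event.timestamp - previous <= WINDOW_SECONDS:
            wasted += 1
        last_seen[key] = event.timestamp
    return wasted / len(events) if events else 0.0
```

Keying on the response hash keeps the comparison cheap and means the raw response never has to be stored.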

It turned out that ~70% of all GET requests to our platform had an identical twin from the last 24 hours. Some of the most common requests are pretty expensive to compute as well, such as listing transactions for the feed, or fetching data for all your accounts. If we could reliably identify even a fraction of these wasteful requests before computing a response to them then we could reduce the load on our platform, save money in database reads, and have no impact on customers.

A failed attempt

The idea of avoiding such a huge amount of wasted work was very inviting, and if we could do it with near 100% accuracy then we might be able to realise the cost saving all of the time as well.

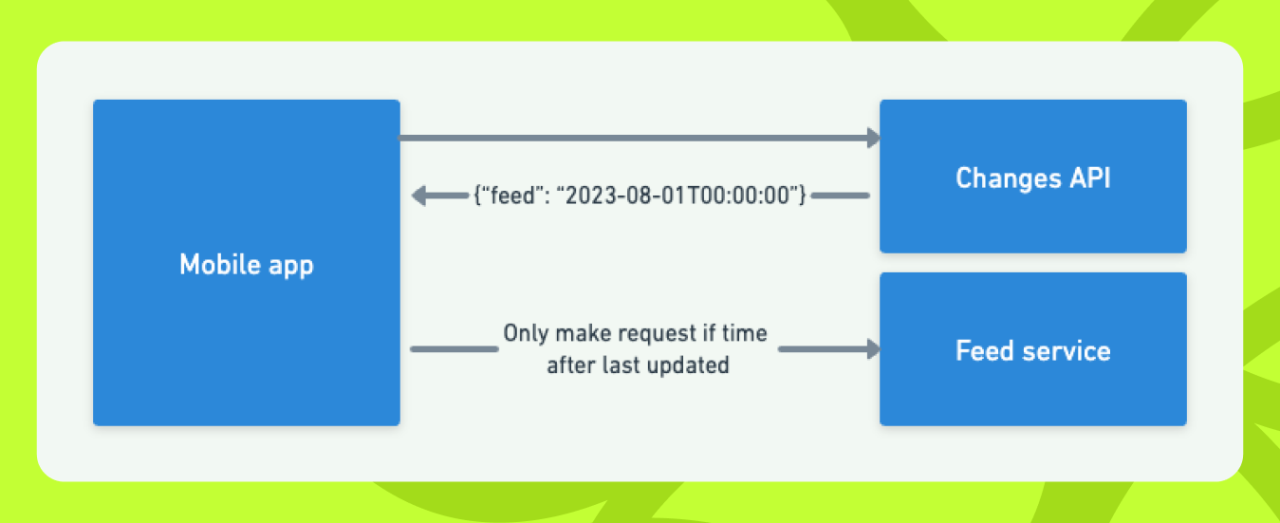

We initially explored a ‘changes API’ which would return the last updated time for our most commonly requested and expensive ‘resources’ such as account balances and transaction feeds.

The idea behind this was for a client to make a request to this API before deciding whether it needed to refresh its view of the actual resource. During normal operation, a client would request an update if its local data was older than the timestamp returned from the backend, but during high load we could even tell clients to tolerate some level of staleness.
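The client-side decision could look roughly like the sketch below. This is one possible reading of the idea, not the design that was actually explored: the function name, its parameters, and the interpretation of "staleness" as the time since the resource last changed on the backend are all assumptions.

```python
import time
from typing import Optional


def should_refresh(
    local_fetched_at: float,
    server_last_updated: float,
    tolerated_staleness: float = 0.0,
    now: Optional[float] = None,
) -> bool:
    """Decide whether to re-read a resource, given the backend's 'last updated' time.

    All arguments are Unix timestamps or durations in seconds.
    """
    if server_last_updated <= local_fetched_at:
        return False  # nothing has changed since the local copy was fetched
    current = time.time() if now is None else now
    # Under high load the backend could raise tolerated_staleness, so clients
    # hold on to slightly out-of-date data instead of refreshing immediately.
    return current - server_last_updated >= tolerated_staleness
```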

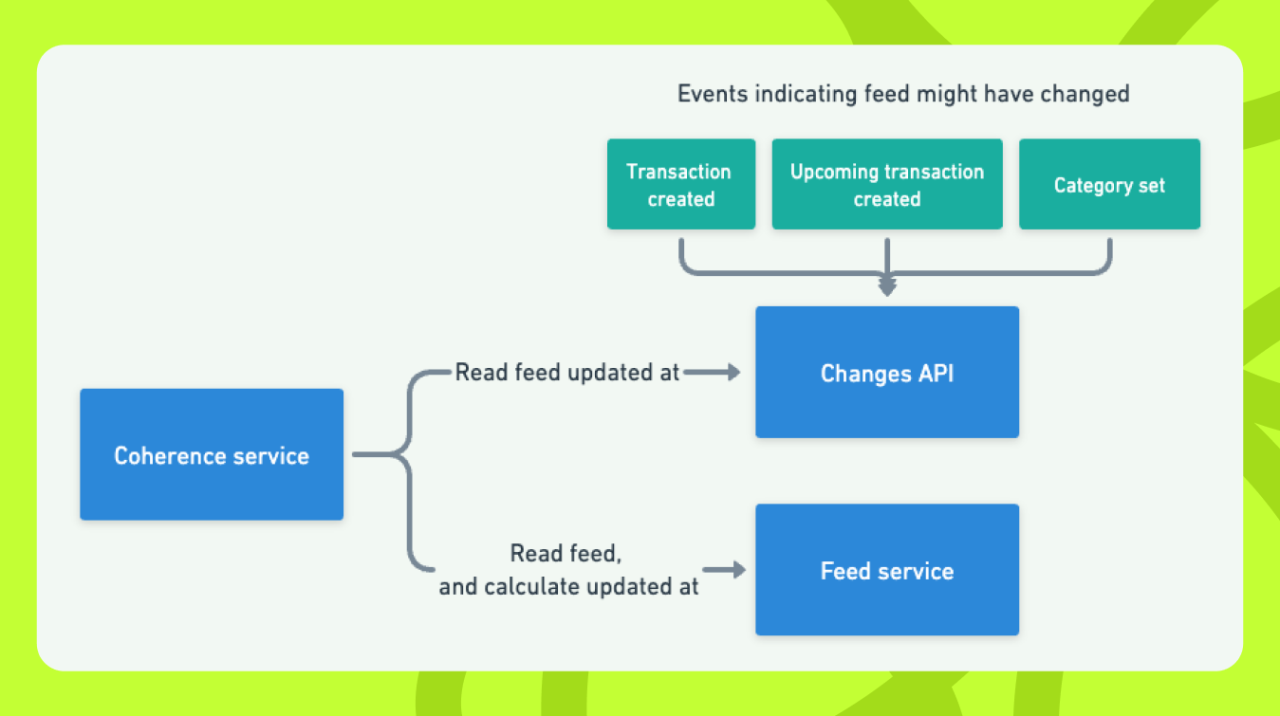

Unfortunately, maintaining a completely correct and up-to-date set of last updated timestamps for resources is equivalent to instant cache invalidation, a hard problem in the world of computer science. We looked into updating our view of ‘last updated’ asynchronously by consuming events that indicated a resource might have changed. An obvious example would be the creation of a new transaction, but a more subtle one could be having a feature flag enabled that changed the presentation of your account balance.

I say “might have” changed because overestimating how recently something has been updated just means clients would read the resource more often than necessary, so there’s no risk of unintentionally showing customers stale data. However, it turned out that many of our most requested resources had some information enriched at request time, and so it was impossible to be sure that we had subscribed to all events which might cause the result of reading the resource to change.

A more practical problem would have been maintaining confidence that our updated timestamps were correct. A common pattern at Monzo is to run coherence checks for cached views of data for a sample of users at a regular frequency. In this case we would want to read the full resource and compare this to the last updated timestamp stored by the changes API. Unfortunately many of the responses that we returned to the client for these resources didn’t have an authoritative timestamp for when they were last changed (also a symptom of enriching some data at request time) and so we couldn’t reliably tell whether the changes API was correct or not.

Solving a simpler problem

Cache invalidation really is hard to solve, and generally relies on specific characteristics of each resource you want to solve it for. But can we solve a simpler problem?

It turns out that we can. Our original goal was to protect our platform from stampeding herds which could take down core services. That doesn’t require a solution that we’re happy to have turned on all the time. And when we do turn it on, we can tolerate a small delay in showing the freshest data, since the alternative is widespread disruption.

What if we knew which requests were coming from people opening their apps? Could we just shed those when we are under high load?

We came up with three features of a request that we thought might be good predictors of whether or not you could shed it with no impact.

Time since we last computed a response to that request - if we request the same data frequently it's unlikely to have changed.

What triggered the request - we know that requests triggered by push notifications are repeated on app open and so aren’t essential.

How long the app had been open when the request was made - perhaps you’re more likely to be updating things if you’ve been in the app for longer?

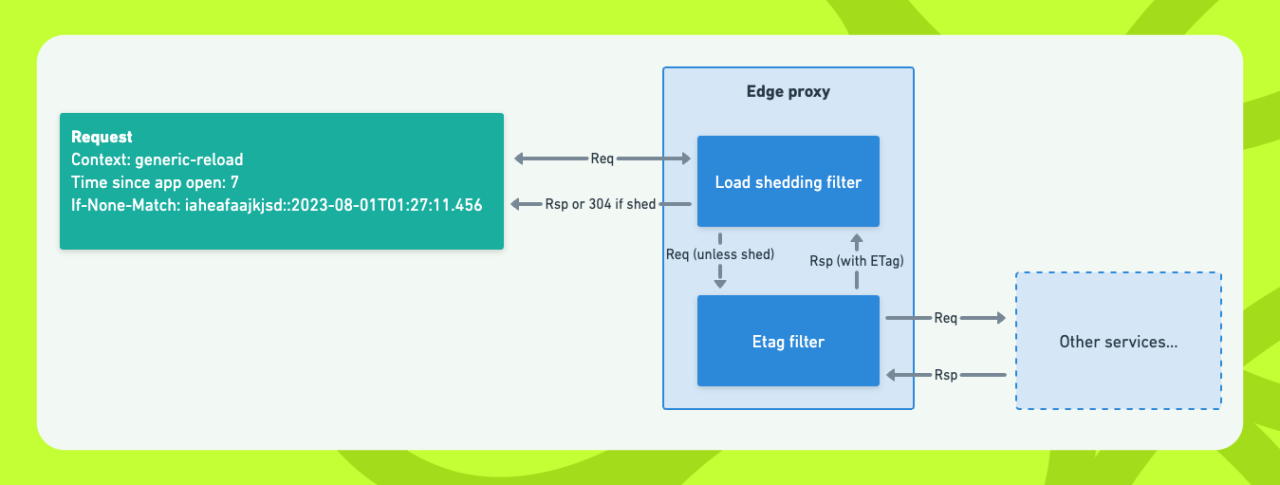

For the first, we encoded a hash of each response and a timestamp for when it was computed into the ETag HTTP header. Typically an ETag is a hash of the response, which clients store along with the full response. They then pass up the last ETag they received in subsequent identical requests using the If-None-Match header. If the server computes the same ETag again, it can return a 304 Not Modified status to the client rather than sending the whole response over the network.

For this use case we abused this header slightly to also encode the ‘last computed’ time, and we can selectively choose to return 304s without actually computing a new response to ‘shed’ a request.
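To make the idea concrete, here is a hedged sketch of an ETag that carries both a response hash and a ‘last computed’ time. The `"<hash>.<unix-seconds>"` layout, the truncated SHA-256 digest, and the function names are assumptions for illustration; the post doesn’t describe the actual encoding.

```python
import hashlib
from typing import Optional, Tuple


def make_etag(body: bytes, computed_at: int) -> str:
    """Build a quoted ETag of the form "<hash>.<unix-seconds>" (layout is an assumption)."""
    digest = hashlib.sha256(body).hexdigest()[:16]
    return f'"{digest}.{computed_at}"'


def parse_etag(etag: str) -> Optional[Tuple[str, int]]:
    """Split an ETag back into (hash, computed_at); None if it isn't in our layout."""
    value = etag.strip()
    if value.startswith("W/"):  # tolerate a weak-validator prefix
        value = value[2:]
    digest, sep, timestamp = value.strip('"').rpartition(".")
    if not sep or not digest:
        return None
    try:
        return digest, int(timestamp)
    except ValueError:
        return None


def same_content(if_none_match: str, body: bytes) -> bool:
    """Compare only the hash part: the timestamp changes on every recompute."""
    parsed = parse_etag(if_none_match)
    return parsed is not None and parsed[0] == hashlib.sha256(body).hexdigest()[:16]
```

One consequence of this layout is that the full ETag string differs every time a response is recomputed, so a normal 304 check has to compare the hash portion rather than the whole header value.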

We worked with some mobile engineers to add a new header to all of our requests, splitting them based on why they were being made. We used the broad buckets of:

push-notification: for requests triggered when you receive a push notification from Monzo

app-open: when you first open the app

generic-reload: when you return to the home screen

interactive: for everything else

We also added a header containing how many seconds it had been since the app came into the foreground.

I had a hypothesis that a very high percentage of requests made when you first open the app, or quickly return to the home screen after you’ve opened the app, were wasteful, since they are very frequently made requests for quite slow-changing data like your balance.

By looking at the relationship between the volume of requests and the percentage that were wasteful (using the same logs that I’d already added to the edge proxy), I was able to determine three policies with a high ‘accuracy’ for finding wasteful requests:

push notification requests which we had served within 24 hours accounted for 20% of requests and could be shed with 80% accuracy

app open requests which we had served within 1 hour accounted for another 20% of requests and could be shed with 95% accuracy

generic reload requests within 10s of app open accounted for 7% of requests and could be shed with 95% accuracy

Choosing accuracy thresholds was somewhat arbitrary here, but I tolerated a lower accuracy for push notification requests since I know all of those requests are repeated on app open anyway so the worst case scenario was a user seeing some stale data for a couple of seconds.
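As a quick check on how the listed policies combine, the arithmetic can be worked through as below. It assumes the percentages are shares of all GET requests and that the policies don’t overlap (each request has exactly one trigger); the dictionary labels are just descriptive.

```python
# (share of all GET requests, accuracy when shed) for each listed policy
policies = {
    "push-notification, served within 24 hours": (0.20, 0.80),
    "app-open, served within 1 hour": (0.20, 0.95),
    "generic-reload, within 10s of app open": (0.07, 0.95),
}

# Total fraction of GET requests covered by the policies.
total_share = sum(share for share, _ in policies.values())

# Accuracy weighted by how many requests each policy sheds.
weighted_accuracy = (
    sum(share * accuracy for share, accuracy in policies.values()) / total_share
)

print(f"shed rate: {total_share:.0%}, overall accuracy: {weighted_accuracy:.1%}")
# prints "shed rate: 47%, overall accuracy: 88.6%"
```

That works out to a little under half of all GET requests, with a request-weighted accuracy just below 90%.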

Measuring impact

At this point, we’d proven in theory we could shed nearly 50% of GET requests to our platform with about 90% accuracy overall and it was time to see if that was true in practice.

I implemented these load-shedding policies in our edge proxy, just like the event logging. The policies work by extracting the last computed timestamp from the ETag in the If-None-Match header, plus parsing the two Monzo-specific headers we added for where the request came from and how long the app has been open. We then apply the thresholds for each request context to determine whether to shed the request by returning a 304, or whether to compute the response.
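The decision logic described above might be sketched as follows. The header names, the `Policy` type, and the `"<hash>.<unix-seconds>"` ETag layout are hypothetical; only the request contexts and thresholds come from the policies listed earlier.

```python
from dataclasses import dataclass
from typing import Mapping, Optional, Sequence

# Hypothetical header names; the post doesn't say what the real ones are called.
TRIGGER_HEADER = "X-Request-Trigger"
FOREGROUND_HEADER = "X-Seconds-Since-Foreground"


@dataclass(frozen=True)
class Policy:
    trigger: str
    max_response_age: Optional[float] = None    # seconds since last computed
    max_foreground_age: Optional[float] = None  # seconds since app foregrounded


POLICIES = (
    Policy("push-notification", max_response_age=24 * 3600),
    Policy("app-open", max_response_age=3600),
    Policy("generic-reload", max_foreground_age=10),
)


def last_computed_at(if_none_match: str) -> Optional[float]:
    """Read the timestamp from an ETag assumed to look like "<hash>.<unix-seconds>"."""
    _, sep, timestamp = if_none_match.strip().strip('"').rpartition(".")
    try:
        return float(timestamp) if sep else None
    except ValueError:
        return None


def should_shed(headers: Mapping[str, str], now: float,
                enabled: Sequence[Policy] = POLICIES) -> bool:
    """True if an enabled policy says we can answer 304 without recomputing."""
    etag = headers.get("If-None-Match")
    if etag is None:
        return False  # the client has no cached response for a 304 to point at
    computed_at = last_computed_at(etag)
    trigger = headers.get(TRIGGER_HEADER)
    for policy in enabled:
        if policy.trigger != trigger:
            continue
        if policy.max_response_age is not None:
            if computed_at is None or now - computed_at > policy.max_response_age:
                continue
        if policy.max_foreground_age is not None:
            try:
                foreground_age = float(headers.get(FOREGROUND_HEADER, ""))
            except ValueError:
                continue  # missing or malformed header: don't shed
            if foreground_age > policy.max_foreground_age:
                continue
        return True
    return False
```

The sketch fails open: a request with a missing or unparseable header is always computed. Real HTTP header lookups are case-insensitive, which a plain mapping like this doesn’t model.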

We can configure which policies are enabled, and we emit metrics to Prometheus when each policy sheds or doesn’t shed a request. This makes it easy to work out the overall shed rate of the policies. But what about accuracy?

Unfortunately, if you shed a request, you can’t know whether you got it right or not. To make the rollout safer I implemented a shadow mode for policies, where we record what decision they would have made but still recompute the response and also record whether or not we could have shed the request with no impact. This won’t give an absolutely perfect measurement since returning a response will update the last computed timestamp on the client, whereas actually shedding it would not, but it gave us more confidence in rolling out the policies. Since we only enable the policies when the platform is under high load we can also use this to monitor the policies’ effectiveness on an ongoing basis and trigger alarms if we need to re-evaluate the thresholds for the policies.
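A shadow mode along these lines could be sketched as below. The function names and outcome labels are assumptions, and a `collections.Counter` stands in for the Prometheus metrics mentioned above.

```python
import hashlib
from collections import Counter
from typing import Callable, Optional


def etag_hash(body: bytes) -> str:
    """Hash used to compare the client's cached response with a fresh one."""
    return hashlib.sha256(body).hexdigest()[:16]


def handle_in_shadow_mode(
    would_shed: bool,
    client_hash: Optional[str],
    compute_response: Callable[[], bytes],
    outcomes: Counter,
) -> bytes:
    """Record what the policy would have done, but always do the real work."""
    body = compute_response()  # shadow mode never skips the computation
    unchanged = client_hash is not None and client_hash == etag_hash(body)
    if would_shed:
        outcomes["correct_shed" if unchanged else "incorrect_shed"] += 1
    else:
        outcomes["missed_shed" if unchanged else "correct_serve"] += 1
    return body


def shadow_accuracy(outcomes: Counter) -> Optional[float]:
    """Share of would-be sheds where the client already had the current response."""
    shed = outcomes["correct_shed"] + outcomes["incorrect_shed"]
    return outcomes["correct_shed"] / shed if shed else None
```

As noted above, this can only approximate real shedding, because serving the response refreshes the client’s last computed timestamp.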

These policies are now part of our generic load shedding framework at Monzo which lets us categorise load shedding measures based on the level of customer impact they will have and apply/un-apply them in batches.

During testing I established that we could shed almost 50% of GET requests with 90% overall accuracy. We saw a modest drop in locks taken in our platform, and a more significant reduction in reads to key database tables such as transactions and balances. Below you can see the impact on requests to the service that drives the home screen, which is one of our most heavily trafficked and most expensive endpoints.

It's difficult to measure exactly what the customer impact of enabling these policies is, but anecdotally it wasn’t noticeable. If we wanted to turn these on all of the time then we’d need to investigate this further, but I’m confident that the impact is much less harmful than our platform becoming overloaded and so this is a great new tool for reducing load during stampeding herds.

Conclusion

We can shed almost 50% of GET requests to the Monzo platform with 90% accuracy by examining just three simple features of the request:

Time since we last computed a response to that request.

What triggered the request.

How long the app had been open when the request was made.

This is a powerful tool for dealing with our stampeding herds: spikes in load when many users open the app at the same time. The policies I defined can be applied progressively to alleviate load on key components by 10-30% and help avoid widespread disruption.

Working on this project taught me how important it is to define your objectives, and to simplify the problem you are trying to solve in light of them. It was much easier to make progress once I stopped aiming for 100% accuracy. I was also struck by how much difference it made to use knowledge of how our product works when choosing broad categories of requests. I think this contributes heavily to the policies not being very noticeable while in action, and shows how useful it can be to combine product and platform knowledge.
