We’ve shared the behind-the-scenes insights into how we wrote 5 million Years in Monzo, and the magic behind the data. Now we’re going to dig into how we turned this data into the Year in Monzo experience you know and love.

We’ll start with our backend approach. For those of you who don’t know, the backend is the portion of the system that lives on Monzo’s servers, and is responsible for serving data to mobile apps (the frontend) via an API which performs authorisation and business logic.

The API gives the frontend everything it needs for the Year in Monzo experience: stats from our Data team (e.g. "You're in the top 5% at Pret"), links to merchant logos and profile pictures, and shareable assets. We wanted the API to be fast and dependable so that Year in Monzo stays enjoyable instead of being a slow, frustrating experience with loading screens and errors. We'll discuss the API design later, but first, let's dig into how we achieved speed and reliability while delivering all the necessary data.

Consuming the data file

While the data team were busy providing us with the user data ahead of launch, we built a pre-processing pipeline for Year in Monzo. The pipeline ingests the user data file provided by the data team, and makes all the necessary Remote Procedure Calls (RPCs) to our internal systems to enrich the data.

Once the data was enriched, we saved it to our AWS Keyspaces cluster, allowing us to quickly retrieve the data to serve the API response.

The only things done at API read time are authorisation and re-shaping the data into the format expected by the apps.

By pre-processing and saving the enriched data, we avoided the need to make expensive and error-prone requests when serving the Year in Monzo API.

The pipeline has a fairly simple structure, made up of two stages: iteration and enrichment.

Iteration

The first step of the pipeline uses some internal Monzo infrastructure called the “RPC Iterator”. The RPC Iterator has a simple task: given an input file, it loops over every user in the file and makes an RPC for that user.

We used the RPC Iterator to create a Kafka message for each user. This event contains the user’s ID and the raw data provided by the data team.

We chose to use a Kafka queue at this stage because it provided us with good resilience through inbuilt retry mechanisms. If an error occurred while enriching a user's data, we would automatically retry the process. If the enrichment continued to fail, the event would be placed in a Dead Letter Queue. This is where engineers can manually examine the event to understand what went wrong and address any bugs.

Enrichment

The enrichment stage begins with a Kafka consumer, consuming the events emitted from the first stage. Upon consuming an event, we have a user ID and the raw data. We then make several internal RPCs to enrich the raw data with:

- URLs for merchant logos

- Names of top friends and URLs for their profile pictures

- Shareable image assets and their URLs

This enriched data is then saved to our Keyspaces database for later retrieval by the API.

The final stage of the enrichment, generating the shareable images, presented a number of challenges that are worth exploring.

Image generation

A key goal of Year in Monzo has been to generate shareable moments of joy for our customers. To help us achieve this goal, we decided to generate and host static images that can be easily shared.

Building the Images - Shiny and Chrome

Pre-generating these shareable images presented some interesting engineering challenges. We had to produce over 5 million images that:

- Are personalised to each customer

- Fit in with Monzo’s brand

- Are in a format that’s easily shareable by our apps

- Are easy to update so we can quickly iterate on designs

To fulfil these requirements we re-used some infrastructure from the 2020 Year in Monzo that allowed us to define our shareable images using HTML and CSS.

HTML and CSS were great choices to fulfil most of these requirements as:

- Go’s inbuilt HTML templating allowed us to easily personalise the images to each customer

- CSS’ powerful capabilities easily allowed us to achieve the look we desired for each image

- Most developers at Monzo are familiar with HTML and CSS, allowing for quick and easy iteration on designs

Unfortunately most people don’t want to share blobs of HTML and CSS to their socials, so we also had to render this HTML and CSS into static PNG files.

To achieve this, we used headless instances of Chrome running as a sidecar container to our image generation service.

When it was time to generate an image for a user, our image generation service would process our HTML template for the current user and then connect to the Chrome sidecar using the Chrome DevTools Protocol. Over this protocol we would instruct Chrome to open a new tab, providing the rendered HTML as the page content.

Once Chrome had finished loading the page, we would instruct Chrome to take a screenshot of the rendered content as a PNG and then close the tab. The image generation service would then take the resultant PNG and upload it to AWS S3 to be later served to the apps.

In summary, to generate a shareable image, we used a small service that would take some templated HTML and CSS and fill in personalisation for a user. We then provided this HTML and CSS to a headless Chrome instance to render a PNG, which was then uploaded to AWS S3.

Running Image Generation for all users - Our OutOfMemory Era

Once we had a system in place for generating the images, we had to generate the images for millions of customers eligible for Year in Monzo. This was done as a stage in the pipeline. To generate images, our pipeline made a synchronous request to our image generation service.

On the first run of the pipeline for all users, everything seemed to be going well for the first 20 minutes or so. Then we got an alert that one of our pods had been killed because it was out of memory, then another, and then another. Soon our pipeline had ground to a halt.

On inspection of our containers and metrics, we noticed our Chrome instances used steadily increasing amounts of memory as soon as we started making requests, until they hit their memory limit and were killed. We also noticed the time to generate an image increased as the memory usage increased.

After some digging we theorised that we didn’t have enough Chrome containers running for the number of images we were trying to generate. Having too few containers resulted in each Chrome instance being instructed to open many tabs. As more and more tabs opened, Chrome used more memory, causing it to slow down. As Chrome slowed down, tabs were closed more slowly, creating an even larger tab backlog until all of the memory was used, which caused the Out Of Memory errors.

The problem was made worse when one container crashed, as its workload would be distributed to the remaining Chrome instances, increasing the load on them, causing a cascade of Out of Memory errors.

We had several options to work around this problem. We could:

- Slow down our pipeline, reducing the load on Chrome

- Increase the number of Chrome replicas, increasing platform load and cost

- Re-architect our solution to generate images based off a work queue

Due to time constraints, we didn’t want to slow down our pipeline: we had an internal target of being able to run the pipeline in under 1 working day. We were also approaching our target date for completing development on Year in Monzo, so we didn’t want to re-architect.

As such, we chose to scale up the number of Chrome instances to reduce the amount of load on individual instances of Chrome. We scaled from around 120 instances up to 600 instances of Chrome. This eliminated most of the Out Of Memory errors, and meant if one did occur, we didn’t get a cascading failure as the excess load was distributed across many Chrome instances.

However, we were now running so many instances of Chrome, we were using a significant portion of Monzo’s compute resources. This initially caused some issues for our Kubernetes cluster, but we worked closely with our fantastic platform team to ensure we had appropriate options set on our Kubernetes manifests, and scaled in a way that would ensure there was no impact to other services in Monzo.

After scaling Chrome, we were able to successfully run the pipeline for all of our users in about 5 hours 🎉

In retrospect, we will likely explore different options for image generation for future projects. In this instance we made a trade-off between development speed and operational complexity. We found it very easy to develop the shareable images in HTML and CSS but had to spend significant time and resources debugging and operating the headless Chrome sidecars.

As image generation is the final stage in the pre-processing pipeline, we now save the data into Keyspaces and we’re ready to accept API calls!

Flexible API

The ever-evolving landscape of design and brand storytelling during the early stages of this project meant that adaptability was key. We understand the significance of presenting our Year In Monzo stories in a way that not only captivates our users but also aligns seamlessly with our evolving brand aesthetics. This is precisely why we invested time and effort in building a flexible component, giving our brand and design teams the freedom to curate narratives that resonate with our audience, in any order they wish.

Ensuring flexibility within reasonable timelines

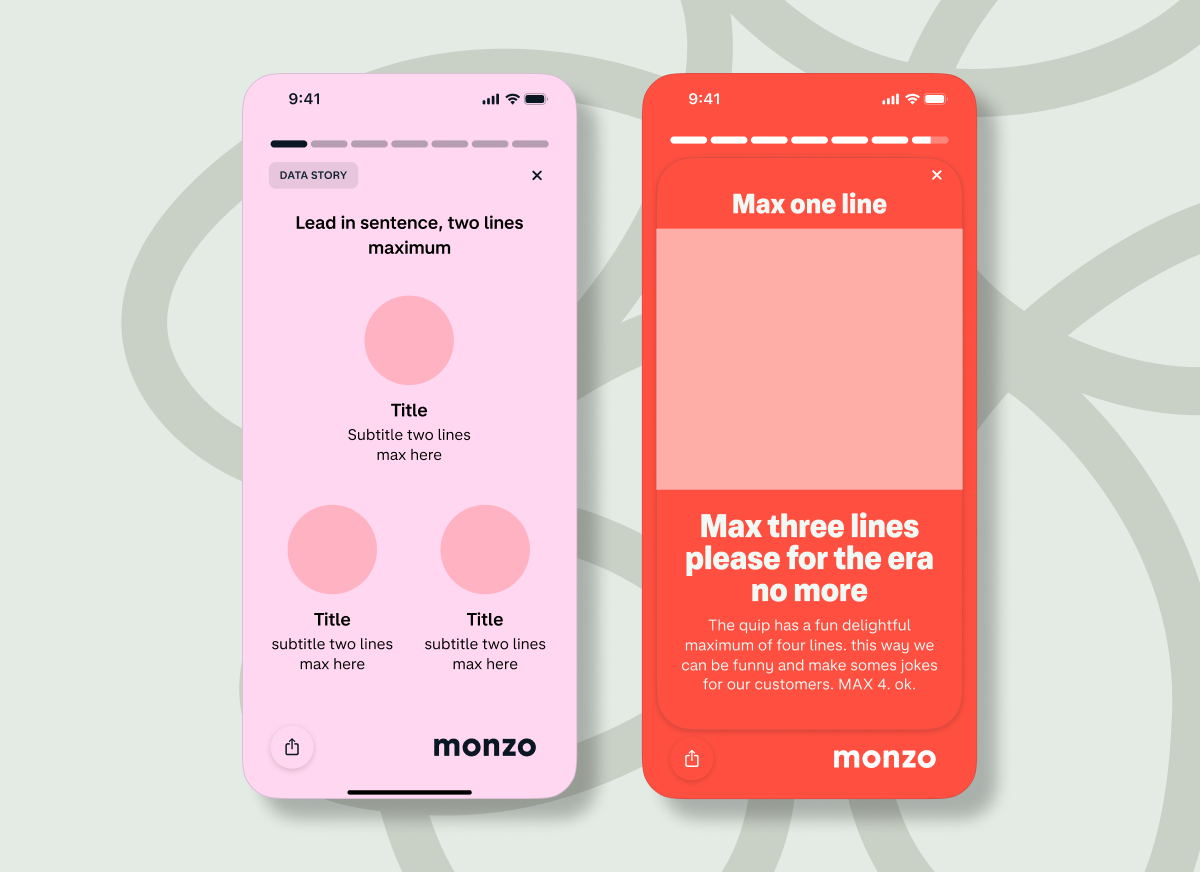

A rigid API structure could limit our creative team, so instead we came up with an API design that added some light design and implementation restrictions, purely to limit the number of use cases and edge cases we had to handle in the short time we had. The majority of the restrictions were there to support accessibility. Examples include line lengths, image sizes and optional fields. We applied these restrictions whilst also designing an API that allowed the creative team to create as many stories as they wanted.

With this in mind, one of our main in-app focuses was performance: we aimed for a smooth and enjoyable experience on every device and OS version we currently support.

The three pillars: templates, animations and Lottie magic

The core of our flexible API revolves around (originally) three distinct story templates, each crafted to offer a unique storytelling canvas. These templates not only house specific native animations but also come with predefined restrictions, ensuring a harmonious blend of creativity and coherence.

Native Animations:

Tailored to our brand identity, these native animations serve as the visual language of our stories. From subtle transitions to eye-catching effects, they provide a dynamic backdrop to the user's financial journey.

Restrictions:

While freedom is essential, a touch of structure ensures a cohesive user experience. The predefined restrictions within each template act as guidelines, maintaining a consistent look and feel across diverse stories.

Lottie Animation:

Adding an extra layer of magic, the Lottie animation in the background synchronises with the native animations. This not only enhances visual appeal but also elevates the storytelling experience, making it more immersive and engaging.

Future-proofing creativity

The beauty of our app implementation and flexible API lies in its scalability. The backend can return as many stories as needed for the experience we wanted to deliver. When a new story was added, it had to follow the pre-defined templates. This meant we could iterate on the experience without any frontend changes.

Furthermore, by establishing a foundation of versatile templates, we've not only met our current design needs but also paved the way for future expansion. This approach allows us to introduce new templates when necessary and explore innovative avenues in the future.

A Behind-the-Scenes Look at Preloading Capabilities in Our App

Our Stories are a visual treat, boasting a mix of captivating animation files and vibrant images. All of this content is served from the backend, making it imperative for us to find a solution that eliminates the awkward appearance of images mid-motion, especially when your internet connection is playing hard to get.

Here's a quick breakdown of the challenge:

- Loading and displaying Lottie animations

- Downloading the images used in the animations

Our approach: fetching all before you dive in

Imagine this: You click into your Year In Monzo, and instead of a loading spinner, you're met with a seamless transition into your Story. How do we achieve this magic?

Loading Screen Magic:

As you first enter your Year In Monzo, a loading screen reaches out to the Year In Monzo API to download your very own Year In Monzo.

Fetching Assets:

Here's the real wizardry – we fetch all the Lottie files and images, ensuring they're preloaded and ready to dazzle you in the flow.

Retry Policies:

We understand that the internet isn't always in our favor, so we implemented a retry policy for every downloaded asset. Each image and Lottie animation gets a minimum of 3 attempts before we kindly ask you for a retry. This not only tackles connection-related issues but also keeps you informed about the hiccup.

User-Friendly Errors:

If a download fails for reasons unrelated to your internet connection, we handle it gracefully. A tracking event fires (we monitor these so we can quickly take action to fix them) and the story seamlessly moves on, preventing a single failure from disrupting your entire experience.

Balancing the pros and cons for a seamless experience

Pros:

Caching:

All assets are cached, eliminating internet connection issues during your Year In Monzo journey.

No Loading Spinners:

Bid farewell to those frustrating loading spinners disrupting your immersive experience midway.

Cons:

Error Overhead:

Fetching multiple assets translates to numerous API calls, increasing the chances of encountering an error. We've got retry policies in place, but hey, the internet can be a bit finicky sometimes.

Batching Challenges:

To manage the API calls, we batch requests (around 5 at a time), ensuring a smoother loading experience but at the cost of a slightly longer wait.

Building a familiar Stories Component

We were mainly focused on creating an engaging and interactive experience that would keep our users hooked. One familiar and suitable format is Stories, popularized by platforms like Instagram. In this blog post, we'll take you through the technical journey of building an Instagram-like Stories component that met our specific requirements.

Requirements

Our goal was to create a native Stories component that is both well-defined and flexible. We needed the backend to have the capability to drive some of the behaviour but also have pre-determined behaviors for each template. Additionally, our Stories had to support various elements, including:

Story Templates:

Well-defined templates that allow for flexibility in content presentation.

Background Animations:

Support for Lottie background animations for visually appealing stories.

Tap Navigation:

Familiar navigation gestures for users.

Sharing Functionality:

The ability for users to share stories seamlessly.

Performance considerations

Creating a performant Stories component requires a solid foundation that balances user experience with technical considerations.

Synchronization of Animations

Keeping native animations in sync with the progress of the story was a key challenge. We needed to ensure that visual elements, such as background animations or interactive components, progressed seamlessly alongside the story's timeline. This synchronisation contributed to a polished and cohesive user experience.

Device Performance

To guarantee a consistent experience across devices, we paid special attention to performance on lower-end devices. Optimising resource usage and minimising the strain on device resources were critical considerations. This involved testing and refining our implementation to provide smooth interactions even on devices with limited capabilities.

Lazy Loading for Resource Efficiency

Efficient resource management was a top priority. We implemented lazy loading to load the content of each story only when it was about to be displayed. This approach minimised the consumption of resources before they were actually required, ensuring a streamlined and resource-efficient Stories flow.

Native animations + Lottie Animations = ❤️

We built each story experience with a mixture of native and Lottie animations. Our native animations prioritise accessibility and performance, ensuring a smooth and inclusive experience, while Lottie adds that extra piece of Monzo magic and flair.

Engineering, design, and motion worked together to build a specification of how each template was to work which included timings between native and Lottie. They are then run in sync with one another to create the experience.

The Android UI was powered by Jetpack Compose. Compose makes animations and transitions far easier to build and manage. We used a combination of Animatable, AnimatedVisibility and Transition to build out all the animations you see. With coroutines powering the animations, the animation states and memory management are pretty much handled for you.

To avoid duplicating the experience for accessibility, we split each template into chapters. This helped us orchestrate the animations so that each one could run either after the previous animation had finished or via a button interaction. Here is an example of this:

```kotlin
enum class TemplateTwoChapter : TemplateChapter {
    RunLeadInContent,
    ShowPresentationItem,
    HidePresentationItem,
    RunPodiumList,
}
```

iOS animations were powered by property animators. Property animators give us more control over the animations and their states and make it easier to chain animations and control their individual states.

```swift
final class TemplateTwoView: BaseTemplateView, StoryViewComponent {
    leadInContentAnimation.startAnimation()
    presentationItemAnimation.startAnimation()
    presentationItemOutAnimation.startAnimation()
    listAnimation.startAnimation()
}
```

Accessibility

The importance of accessibility cannot be overstated. Our aim was to make Year In Monzo accessible for every one of our users so we looked at how we could make that happen with different accessibility features turned on.

Screen Reader-Friendly Experience

For users who rely on screen readers to navigate their apps, we understand the significance of a seamless experience. We worked on providing descriptive view elements, intuitive navigation, and clear, concise information. The screen reader not only reads out a description of the content but also gives information that describes the experience.

We invite users who rely on screen readers to share their experiences with us. Your feedback is invaluable in helping us refine and enhance our approach to accessibility, making sure that every user feels included.

Reduced Motion Options

Motion can be a challenge for some users, causing discomfort or even hindering their ability to interact with digital interfaces. Recognising this, we've incorporated a reduced motion option within Year In Monzo. This feature minimises unnecessary animations, creating a more comfortable experience for users who may be sensitive to motion effects.

Given Year In Monzo is primarily an animation-based experience, we looked at combining screen reader support and reduced motion into one experience, to ensure we could provide a great experience in the limited time we had.

Your comfort matters to us, and we want Monzo to be an enjoyable experience for everyone. If you have suggestions on how we can further improve the reduced motion experience, we're all ears! Your insights will guide us in making ongoing adjustments to ensure that our platform accommodates a diverse range of needs.

Responsive Font Scaling

Clear and legible text is fundamental to a positive user experience. Year In Monzo employs responsive font scaling, ensuring that text remains easily readable across various devices and screen sizes.

To sum up, we designed and built a flexible API that applied minimal restrictions to support accessibility and allow our creative team freedom, whilst helping us hit our deadlines. We invested heavily in making the user experience as good as it can be, using pre-loading to make the experience seamless and animations for that extra touch of Monzo magic.

And that's a wrap (no pun intended 😉 ) on our Building Year in Monzo 2023 blog series! Thanks for all the customer love – and feedback. We've loved every moment and we hope you did too.