The ledger is where we keep track of all customer money movements coming in and out of customers’ accounts. It’s the bank’s double entry bookkeeping system. As more customers use Monzo, more transactions run through the ledger and are recorded as ledger entries. As the number of ledger entries skyrockets, we have to ensure that the time taken to read the entries required for balance calculation continues to be fast.

In June 2022, we started a project to speed up the ledger balance read time. It was a complex project because all payments and product services depend on balance calculations to maintain accurate financial information. We needed to make sure the calculations would continue to be correct as we made these improvements.

So this is the first of a two-part series about this project. In this post we’ll cover the planning and experiments we ran, and in the second post we’ll follow the testing and implementation.

Keywords: Balances, ledger address, and DB reading time

Before getting into the detail, it’s helpful to know some common terms you’ll see in this post.

Ledger address: A customer ledger address is an entity under which double entry style debits and credits are grouped. In traditional double entry accounting, a ledger address is analogous to a ledger account — however we deliberately do not use the term "account" as this term is used more specifically within Monzo.

An address is a 5-tuple of (legal_entity, namespace, name, currency, account_id)

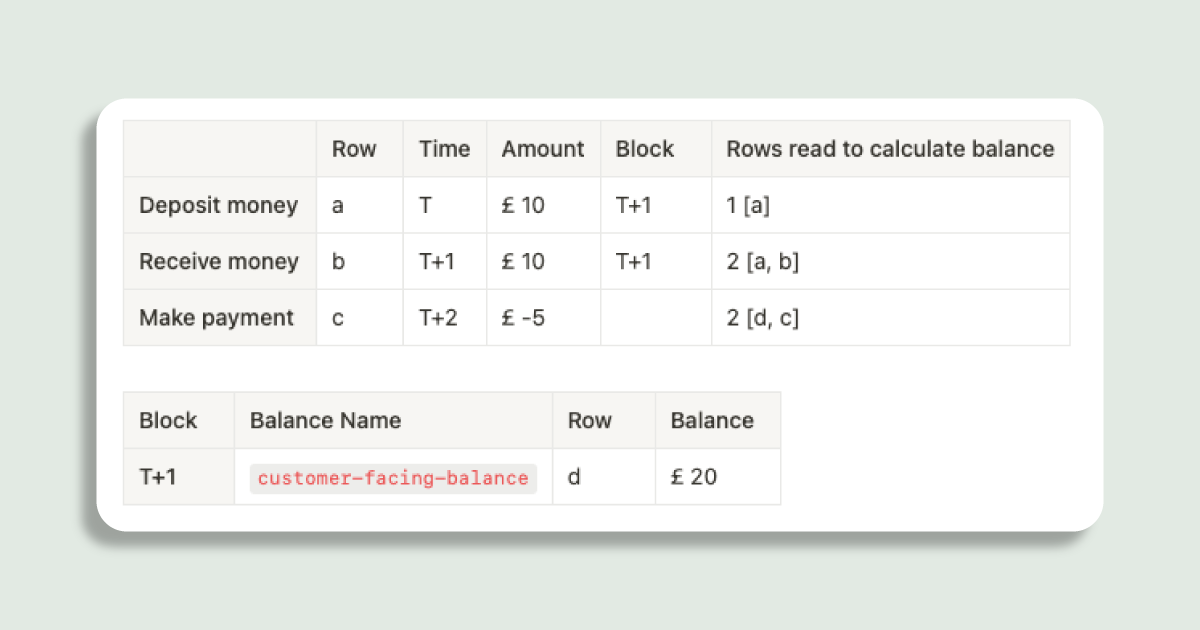

Balance names: indicate what the common use case is for a balance. The most common used one is customer-facing-balance which is the balance you see in your main account in the app.

Database read throughput: is a measure of how many queries a database can handle within a fixed period of time, and is typically expressed in rows per second or queries per second. A higher throughput means that the database can process more queries in a given time period, resulting in faster access to data and better performance for the application.

For more information about the above terms, have a look at our previous post on how we calculate balances.

Balance read time was reaching a tipping point

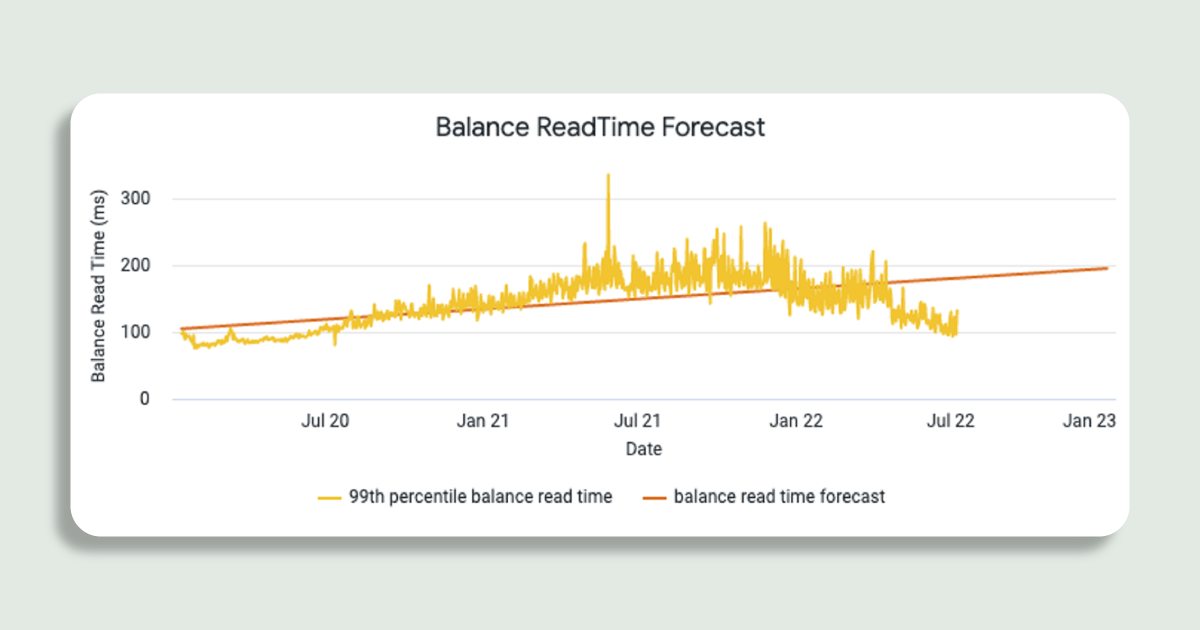

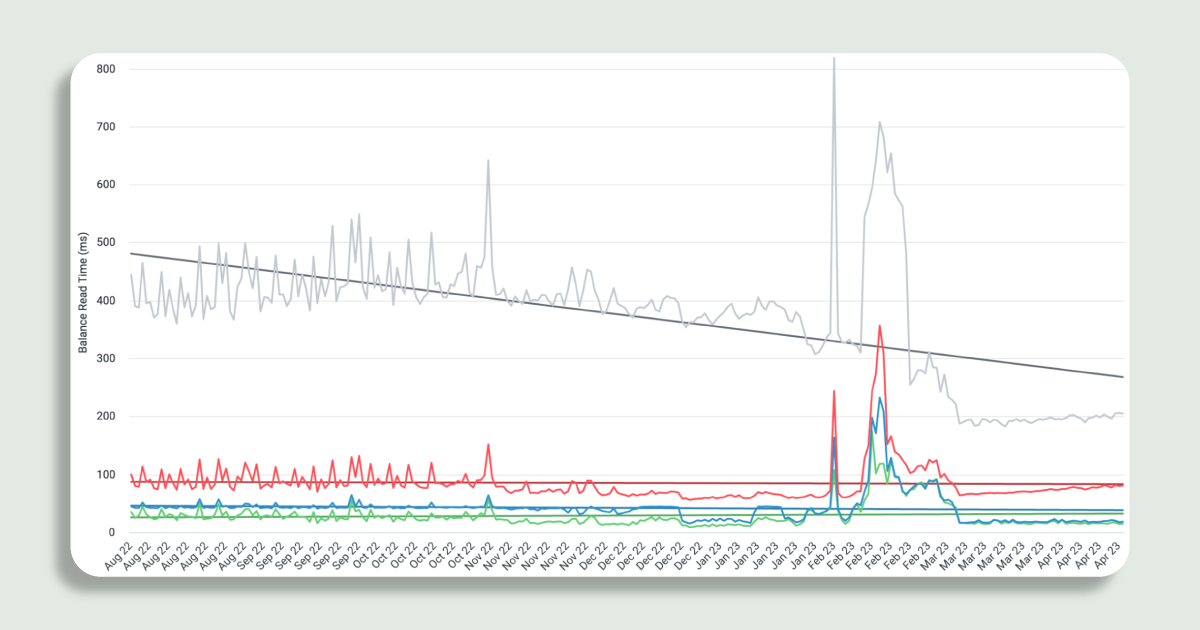

As Monzo’s user base increased over time, we saw an associated increase in the read time for balances from our ledger.

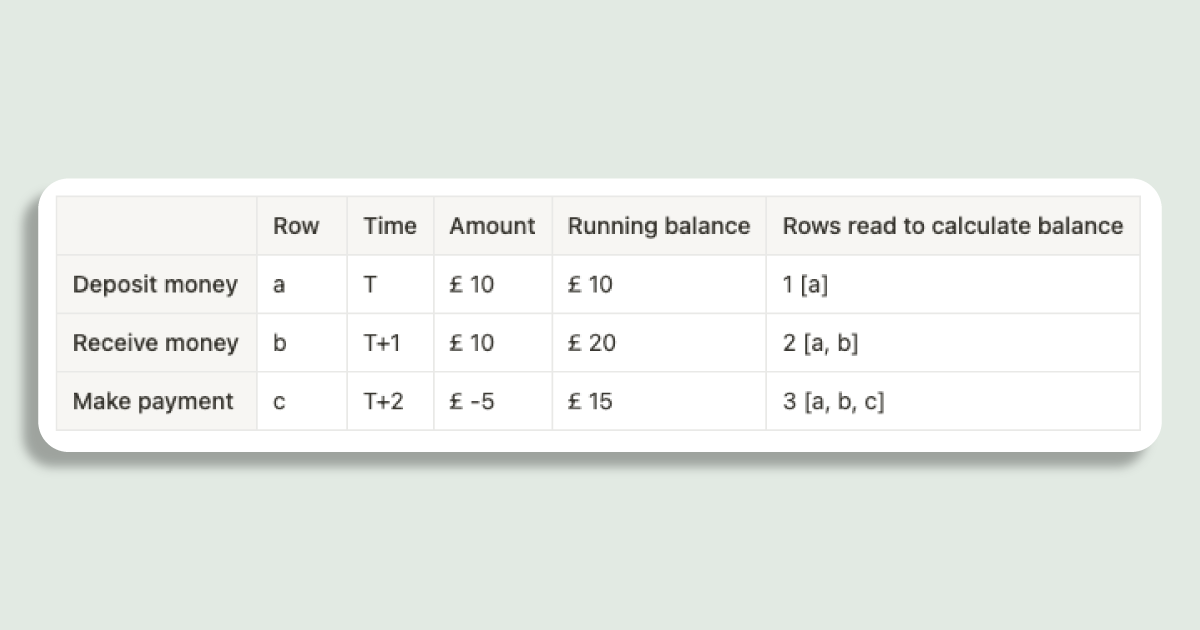

Balance calculation involves reading all entries in the addresses group that form a balance name and then adding all of them up. This means balance calculation time will increase proportional to the growing number of Monzo customers and transactions.

Slow balance calculation is bad

There are many time-sensitive processes that depend on the ledger, most notably card payment processing.

When Monzo processes a card payment, the card payment network may authorise the transaction on behalf of Monzo as a back up called “stand-in processing” without waiting for Monzo’s confirmation.

If the balance read times are long during payment processing and falls back to stand-in:

we might not be able to check if the customer has sufficient balance to make a payment and authorising a payment too large could take customer’s balance into negative unexpectedly

we might not be able to complete all fraud checks involving balances in time before we can make an informed decision

This puts our customers and Monzo at risk as we have little control over the authorisation decision.

⚖️ Scope of our solution

We wanted to design a scalable solution so that we not only support balance read time in the ledger today, but also in future as Monzo’s customer base grows.

As discussed in How we calculate balances at Monzo, we have different types of balances. Our core balances are ones that are most commonly used, such as the customer-facing-balance used in payment processing.

If we can’t speed up balances for all our use cases, we’ll focus on speeding up the balance read time that has the most impact for our time-sensitive processes. This means the solution needs to improve balance read times of our core balances.

Discovering different ways of speeding up ledger balance read time

Calculating balances in the ledger is divided into two steps:

Fetch the ledger entries to calculate the balance from.

Sum the amounts of all of the entries.

By monitoring ledger behaviour using our service metrics, we’ve found the majority of balance read time is spent reading entries from the ledger database.

So to reduce the time spent on reading ledger entries, we could either:

increase the read throughput for balance calculation

reduce the volume of data read from storage for balance calculation

While we saw that increasing read throughput could be effective, it is fundamentally unscalable because the number of ledger entries to read is always increasing. Reducing the volume of data being read was the way to go.

A balance changes whenever a customer makes a new transaction, but a balance in the past remains the same, so we can calculate a new balance by either summing the amounts of all entries or by adding the amount of the new entry to the previous running balance without reading past entries.

The challenge is to find a way to efficiently and correctly storing the running balance to reduce the number of ledger entries we need to fetch for a balance calculation.

Pre-Computing Balances in Blocks

group consecutive ledger entries into what we called a block

store the sum of those ledger entries balances in a separate database table for quick look up

use balance names as the primary key for the table

only store the most-used balance names as these represent the balances which need to be quickly read

To calculate a balance, all we need to do is to read a block with the balance name.

Reindexing ledger entries table to a time series

Prior to this migration, we used a table schema which did not include a time component. This meant that partitions in our Cassandra data store kept getting larger, making read queries inefficient. To mitigate this, we needed to include time element to storing entries.

As part of this migration, we reindexed our ledger entries into a time series table. A time series table stores data partitioned by a time bucket (range) column from a time column (in our case we chose the committed_timestamp field), combined with other columns (in our case we chose address columns).

The above schema allows us to

👉🏼 Filter by committed timestamp of an entry. Given committed timestamp and its corresponding ledger address, we can retrieve all entries that has been recorded since that timestamp.

👉🏼 Partially read entries given their committed timestamp, in other words, filter out entries outside of the time bucket, efficiently skipping irrelevant entries.

🧑💻 Test service to imitate the ledger

Before we conducted the reindexing in our live systems, we ran some experiments to find the optimal bucket size for our Cassandra data store whilst being able to provide all the functionality our ledger service provides in an efficient manner. The bucket size is the time interval on which one partition will include data in. For instance a bucket size of three months, means three months worth amount of entries in one data store partition.

We didn’t want Monzo customer’s experience to degrade by carrying out tests and experiments on such a critical service to validate our hypothesis and experiments, so we’ve decided to test our ideas on a mock service that has similar characteristics and data access pattern as our primary ledger service.

⚒️ Deciding the optimal solution

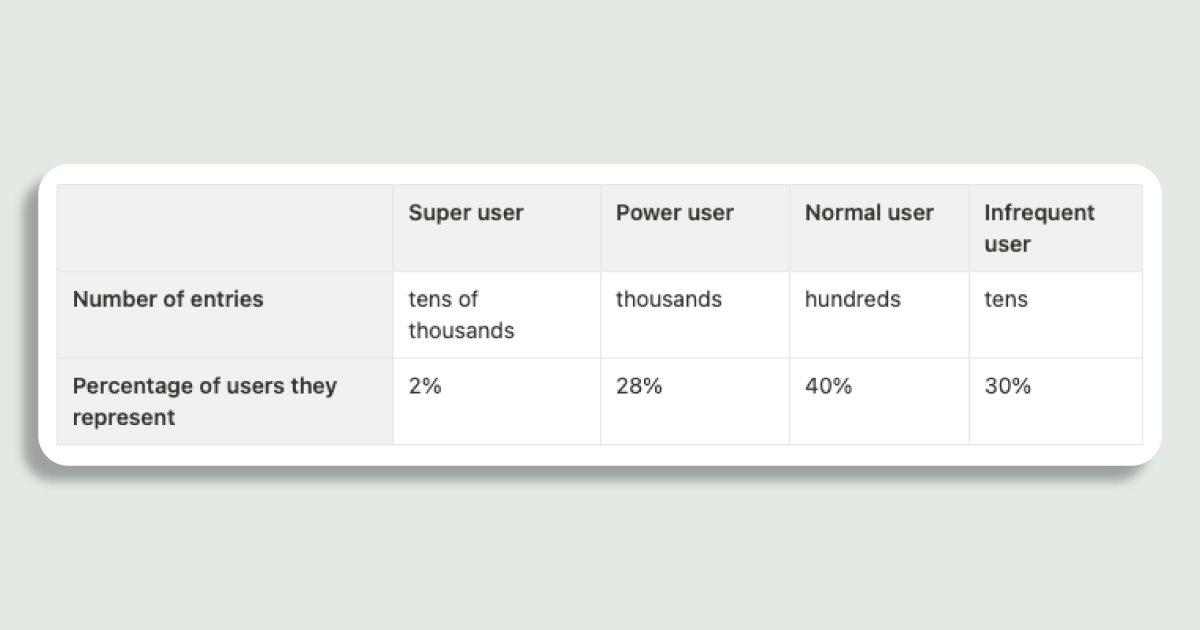

We identified four primary categories of users, categorised by the number of ledger entries they have in their accounts — i.e the number of transactions they have done since they’ve opened their accounts.

In the testing service we have randomly generated entries for each category, compared how our proposed solutions perform, finally chose the solution with the best trade off.

🥼 Experimenting with different options and finding a winner

We developed an RPC endpoint in the testing service to read the customer balance. We ran that endpoint thousands of times, using three different bucket sizes (3 months, 6 months, and a year) for every user type mentioned above. We found that there wasn’t a clear winner for all the user categories.

We converged on an approach in reading from our time series table where we would spawn multiple concurrent threads to read different buckets concurrently to speed up execution. This approach worked best from our experimenting for super, power, and normal user type.

We ran the migration in March 2023 and alongside some other infrastructural changes, we saw a decrease and stabilisation of our P99 latency.

⏩ To be continued…

In the next post, we will cover how we ran the migration in an online manner, including running continuous coherence and validation checks to make sure the migration did not compromise on correctness.

During the migration, we also implemented the ability to split partitions, to keep the volume of data in each sub-partition small. We will go into our decision process for choosing shard counts for different ledger addresses.

Stay tuned 😉

If you're interested in any of the work we're doing at Monzo, check out the roles below, or head over to our careers page