We run thousands of microservices at Monzo, and our architecture allows us to continually deploy new and improved features for our customers to enjoy. This benefit, however, comes at the cost of complexity. A single API call can spawn a distributed call graph spanning many services, each of which may be owned by a different engineering team.

In this post we'll focus on how this complexity impacts the development experience and what we’re doing to tackle this challenge. Read on to see how we’re enabling our engineers to develop and debug their services locally so that they can move with speed and safety as Monzo continues to grow 🚀

Background

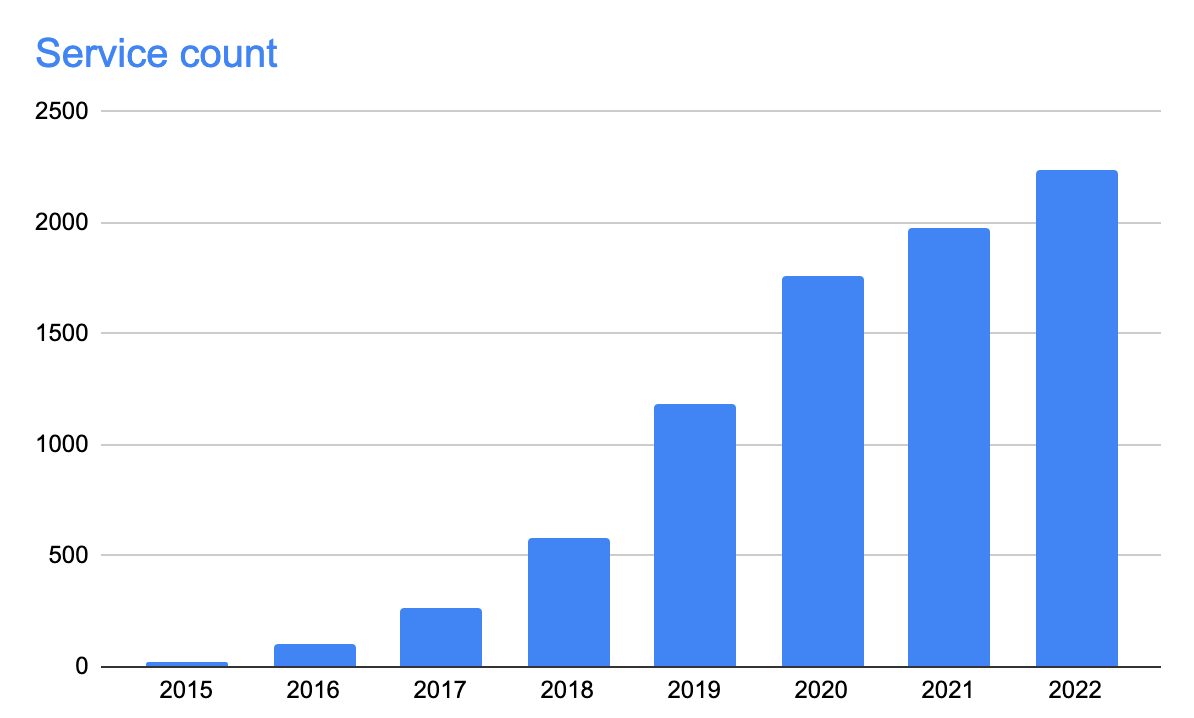

In the chart below, we can see that in Monzo’s early days there were relatively few services: so few that it was possible to run them all on a laptop.

We used a tool called Orchestra to spin up services in the right order and configure them. We could also choose to run only a subset of our services.

By 2018 Monzo had grown dramatically and so had the number of services. Orchestra had reached its limit:

Running all services on a laptop was no longer an option

The call graphs between services had become much more complex and dynamic, making it difficult to determine the minimal set of services that were required to perform an operation

As the overall number of services increased so too did the probability that any one service was misconfigured. Local configuration settings are very often different to those in other environments and it’s easy to forget to update them.

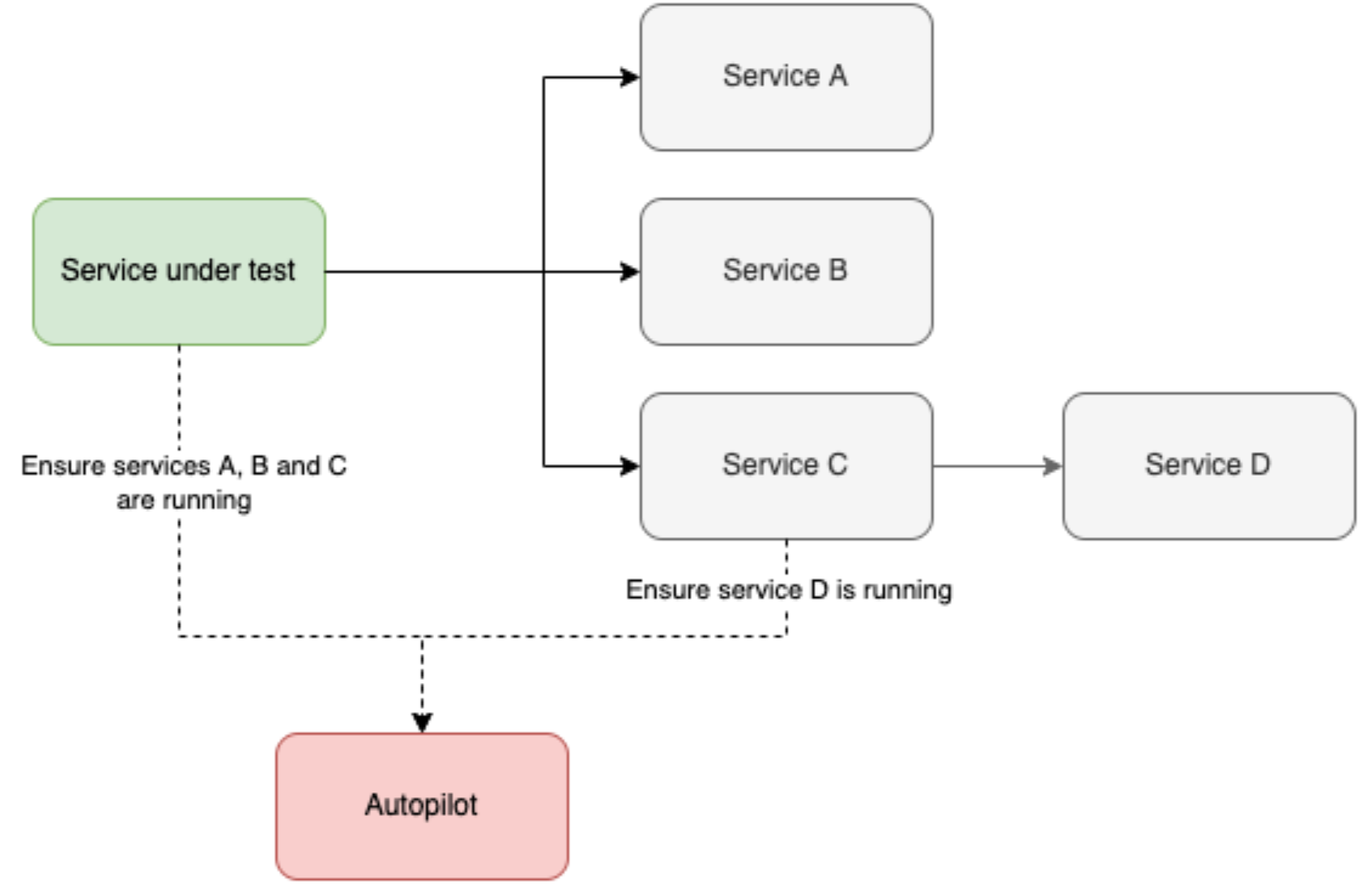

Thus a new system called Autopilot was born. In a nutshell, when a local service made an outbound request, Autopilot intercepted it and started the requested service.
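To make the Autopilot idea concrete, here is a toy model in Python. This is a minimal sketch, not Autopilot's implementation. It assumes services can be modelled as functions and that "starting" a service means calling a launcher function. The class `LazyStarter` and the service names `service.user` and `service.account` are hypothetical. The sketch intercepts each outbound call and boots the target on first use, and the transitive dependency shows how one request can start a chain of services.

```python
from typing import Callable, Dict, List

# A "service" is modelled as a function from a request body to a response body.
Handler = Callable[[dict], dict]


class LazyStarter:
    """Toy model of Autopilot: start a service the first time something calls it."""

    def __init__(self, launchers: Dict[str, Callable[[], Handler]]):
        self._launchers = launchers              # service name -> function that "boots" it
        self._running: Dict[str, Handler] = {}   # services started so far
        self.start_log: List[str] = []           # order in which services were started

    def call(self, service: str, body: dict) -> dict:
        # Intercept the outbound request; start the target if it isn't running yet.
        if service not in self._running:
            if service not in self._launchers:
                raise LookupError(f"don't know how to start {service}")
            self.start_log.append(service)
            self._running[service] = self._launchers[service]()
        return self._running[service](body)


def boot_user() -> Handler:
    return lambda body: {"user_id": body["user_id"], "name": "Alice"}


def boot_account() -> Handler:
    # service.account depends on service.user, so its first call starts another service.
    return lambda body: {"owner": starter.call("service.user", body)["name"]}


starter = LazyStarter({"service.user": boot_user, "service.account": boot_account})
print(starter.call("service.account", {"user_id": "u1"}))  # {'owner': 'Alice'}
print(starter.start_log)  # ['service.account', 'service.user']
```

Every launcher in this sketch is trivially correct. In practice, each one stood for a real service that needed working local configuration and seed data.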

Unfortunately, Autopilot also ran into issues of scale and it fell out of favour (more on this later). As a result, alternative development workflows emerged.

Some engineers practised test-driven development (TDD) at all times, mocking their dependencies. This is a great practice, but it does have drawbacks:

For larger bodies of work, where it’s not initially clear how the final code should look, you end up reworking not only your code many times but also your tests. This can be a slow and frustrating process, and at times it’s much more efficient to write tests after the code has been manually tested and refined.

Additional testing is still required because mocks may become incorrect

Other engineers preferred to write unit tests later in their process and, during feature development, would repeatedly deploy to our shared staging environment. This too can be inefficient:

The shared environment drifts from being production-like due to the continual deployment of changes which aren’t yet production ready. This can easily lead to instability, which slows everyone down

Contention occurs when multiple engineers want to work on the same services in parallel

It’s slow compared to testing everything locally since a remote deploy is required

Debugging remote processes can be painful as it’s hard to attach an interactive debugger

The problem

We found that Autopilot ended up suffering from a similar problem to Orchestra. Most services require some form of configuration, and the configuration required to run a service locally is often different to the configuration required in other environments. Many services also depend on the existence of certain seed data. This means a human not only has to define local configuration and seed data in the first place but also has to maintain them as the service evolves.

We observed a pattern with Autopilot which in the end made it unusable in many cases. It started when an engineer couldn't run their service because a dependency failed due to problems with its configuration and/or seed data. After running into this problem a few times, the engineer gave up trying to run their services locally. This understandably led them to lose all motivation to maintain the local configuration and seed data for their own services. That behaviour in turn compounded the problem: even more services ended up with incorrect local configuration and seed data, and the poor experience cascaded.

This suggests that even if we were to give each engineer a dedicated cluster, so that they could run all services concurrently, we’d likely experience the same problem. Whether we use Autopilot or dedicated clusters, the challenge is the same: many of the services which run within the development environment must be configured specifically for that environment.

Solutions

As it happens, there are ways to sidestep the configuration/seeding problem. We could:

Option 1: Allow locally running services to call into our staging environment

Option 2: Provide an easy way for engineers to fake their dependencies

Routing requests into our staging environment is conceptually simple. It also removes some of the main inefficiencies of the workflow that emerged, where engineers frequently deploy to staging. It has its own set of limitations, however:

Engineers must be online

If a service is broken in staging then it may prevent people from testing local services which depend upon it

The version of a service in staging may not be the same as its corresponding version in production. This could mean tests which exercise this service may be misleading

In order to manually test a service, all of its dependencies must be deployed to staging. This limits our ability to develop groups of new services in parallel

There is limited scope to test failure modes because it may be difficult to force some dependency to fail in specific ways

If it’s easier to route requests to the shared staging environment from services which are being actively developed then this may increase the risk of data becoming corrupt in staging

Let’s now consider how things would look if we faked our dependencies. This wouldn’t suffer from any of the limitations associated with routing requests to staging, however:

Some level of effort is required to create the fakes

Over time a fake may cease to represent reality. This could mean tests which exercise this fake may be misleading.

Both of these solutions feel pretty nice in different ways. We also discovered that Lyft were already doing both and this increased our confidence in their potential.

Devproxy

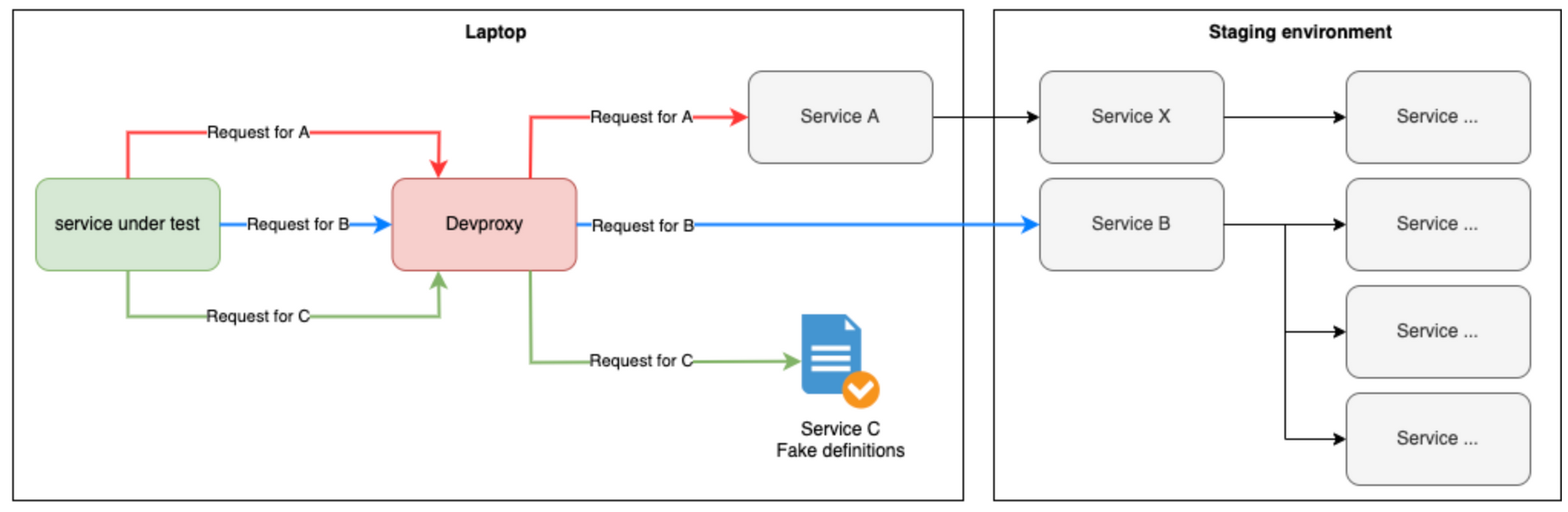

Devproxy is our latest attempt to improve the developer workflow at Monzo. It builds upon Autopilot and offers the following headline features:

Requests can be routed to services in our staging environment

Support for service virtualisation, so that engineers can easily create fake endpoints

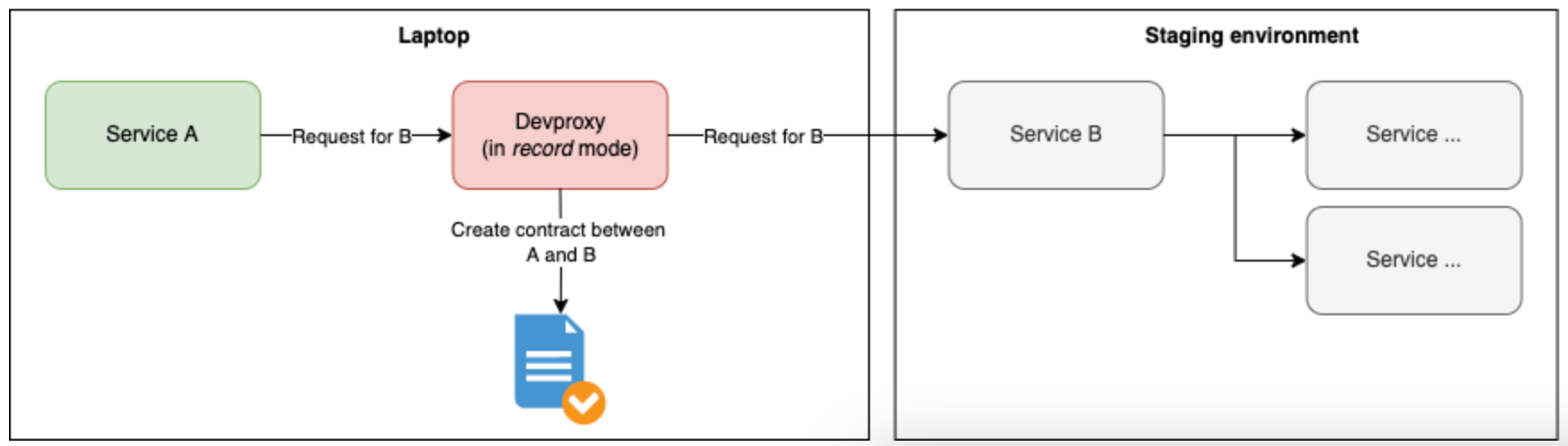

Here we see how requests may be routed when Devproxy’s in the picture.

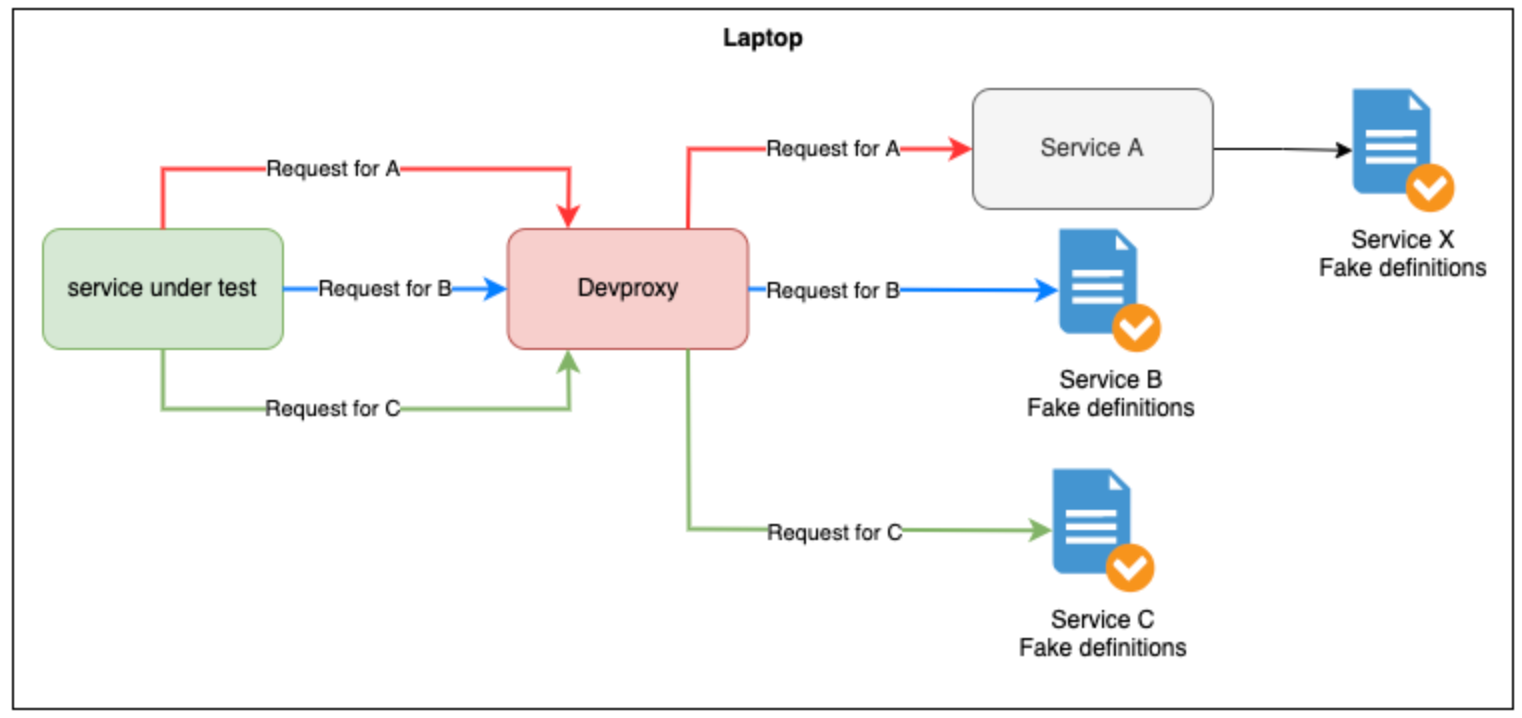
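The per-request choice Devproxy makes between a local service, a fake endpoint and staging can be sketched as a small decision function. This is a minimal sketch under a loudly stated assumption: the precedence used here (local first, then fake, then staging) is our guess for illustration and not a description of Devproxy's actual rules. The names `route` and `Destination` and the services `service.accounts` and `service.ledger` are hypothetical.

```python
from enum import Enum
from typing import Set


class Destination(Enum):
    LOCAL = "local"      # the target is running on the engineer's machine
    FAKE = "fake"        # a contract (fake endpoint) answers instead
    STAGING = "staging"  # fall through to the shared staging environment


def route(target: str, running_locally: Set[str], faked: Set[str]) -> Destination:
    """Decide where an outbound request from a local service should go.

    ASSUMPTION: the precedence (local, then fake, then staging) is chosen for
    this sketch and is not taken from Devproxy itself.
    """
    if target in running_locally:
        return Destination.LOCAL
    if target in faked:
        return Destination.FAKE
    return Destination.STAGING


running = {"service.accounts"}
fakes = {"service.pets"}
for target in ["service.accounts", "service.pets", "service.ledger"]:
    print(target, "->", route(target, running, fakes).value)
```

Keeping the decision in one pure function makes the routing policy easy to test and to change independently of the proxying itself.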

Service virtualisation

Service virtualisation is a technique for quickly emulating services. It’s similar to mocking, but the emulation is performed at a higher level and is aimed specifically at service APIs.

In the previous diagram, we saw requests being routed into our staging environment. While this ability is great and it saves engineers time on deploys, things are still pretty complex. There are loads of network calls and any one of these could fail for a variety of reasons (e.g. a buggy dependency might be deployed).

When you’re testing a service you typically don’t care how its dependencies work; you just care that if you pass them a request they give you back an acceptable response. This means we can simplify things massively by faking our dependencies. These fakes don’t change without notice, nor do they make their own network calls. Fast and deterministic 🚀
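A minimal sketch of the idea in Python: the dependency is modelled at the API level as `(method, path, body) -> (status, body)`, and the code under test only sees that interface. `fake_pets` and `describe_cat` are hypothetical names, and the in-process function stands in for a fake served over the network. The fake makes no network calls and always returns the same answer.

```python
from typing import Callable, Tuple

# A dependency is modelled at the API level: (method, path, body) -> (status, body).
Endpoint = Callable[[str, str, dict], Tuple[int, dict]]


def fake_pets(method: str, path: str, body: dict) -> Tuple[int, dict]:
    """Stand-in for service.pets: no network calls, same answer every time."""
    if method == "GET" and path == "/cats":
        return 200, {"name": body["name"], "age": 4}
    return 404, {}


def describe_cat(pets: Endpoint, name: str) -> str:
    """Code under test: it only cares that the dependency returns an acceptable response."""
    status, cat = pets("GET", "/cats", {"name": name})
    if status != 200:
        return f"no cat called {name}"
    return f"{cat['name']} is {cat['age']} years old"


print(describe_cat(fake_pets, "Mittens"))  # Mittens is 4 years old
```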

Faking made easy

One of the downsides of service virtualisation was mentioned earlier: some level of effort is required to create fakes. While some effort will always be required, there are ways we can make things easier.

Devproxy can operate in a number of modes, one of which is record. In this mode, in addition to routing requests to the appropriate destination, Devproxy encodes the requests and responses into contracts (the reason behind this name will become clear later). These contracts can then act as definitions for fake endpoints.
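The record and replay flow can be sketched in a few lines of Python. This is an illustrative model, not Devproxy's contract format. `Recorder`, `replay`, `Contract` and `staging_pets` are hypothetical names, and request bodies are kept as JSON text so that requests can be used as dictionary keys. The recorder forwards each request to the real destination and keeps the exchange. Replay then turns those exchanges into a fake that answers exact repeats.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Request:
    method: str
    source: str
    endpoint: str
    body: str  # JSON text, so the request is hashable and can be used as a key


@dataclass
class Contract:
    request: Request
    status: int
    response_body: dict


Upstream = Callable[[Request], Tuple[int, dict]]


class Recorder:
    """Record mode: forward each request to its real destination and keep the exchange."""

    def __init__(self, upstream: Upstream):
        self.upstream = upstream
        self.contracts: List[Contract] = []

    def __call__(self, req: Request) -> Tuple[int, dict]:
        status, body = self.upstream(req)
        self.contracts.append(Contract(req, status, body))
        return status, body


def replay(contracts: List[Contract]) -> Upstream:
    """Turn recorded contracts into a fake that answers only exact repeats of them."""
    table: Dict[Request, Contract] = {c.request: c for c in contracts}

    def fake(req: Request) -> Tuple[int, dict]:
        c = table.get(req)
        if c is None:
            return 404, {"error": "no matching contract"}
        return c.status, c.response_body

    return fake


def staging_pets(req: Request) -> Tuple[int, dict]:
    # Stand-in for the real service.pets running in staging.
    return 200, {"name": json.loads(req.body)["name"], "age": 7}


def cats_request(name: str) -> Request:
    return Request("GET", "service-my-service", "service.pets/cats", json.dumps({"name": name}))


recorder = Recorder(staging_pets)
recorder(cats_request("Tom"))
fake = replay(recorder.contracts)
print(fake(cats_request("Tom")))    # (200, {'name': 'Tom', 'age': 7})
print(fake(cats_request("Felix")))  # (404, {'error': 'no matching contract'})
```

The second lookup shows how specific recorded contracts are. Any change to the request, even a different name, misses the recorded table.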

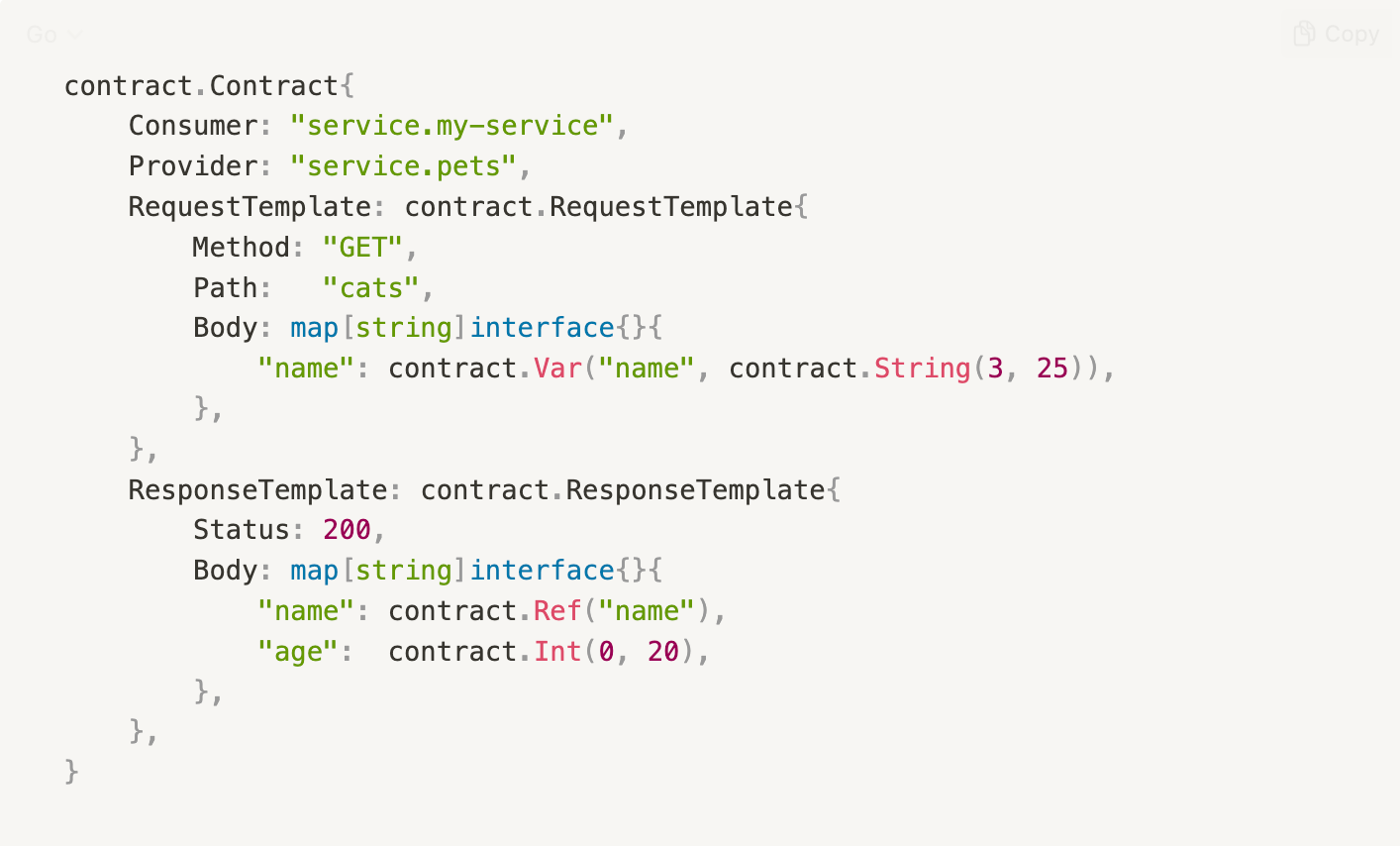

The record and replay workflow allows for very rapid contract generation. However, it is somewhat limiting because it produces contracts which match very specific requests and generate very specific responses. Our engineers can refine their recorded contracts to make them more general and dynamic. For example, a refined contract could match:

GET requests from service-my-service to the endpoint service.pets/cats

Requests where the body has a name field which contains a string between 3 and 25 characters long

A response to these requests can then be generated which:

Returns an HTTP 200 OK response

Contains a name field within the body that equals the name in the request

Contains an age field within the body that is a randomly generated integer between 0 and 20
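The refined contract described above can be sketched as a matcher plus a response generator. This is a minimal Python sketch, not Devproxy's contract syntax. `CatsContract` and `serve` are hypothetical, and we assume the "between" bounds are inclusive. A seeded `random.Random` keeps the generated ages reproducible.

```python
import random
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Request:
    method: str
    source: str
    endpoint: str
    body: dict


@dataclass
class CatsContract:
    """A hand-refined contract mirroring the example above (bounds treated as inclusive)."""

    method: str = "GET"
    source: str = "service-my-service"
    endpoint: str = "service.pets/cats"
    min_name_len: int = 3
    max_name_len: int = 25
    max_age: int = 20

    def matches(self, req: Request) -> bool:
        name = req.body.get("name")
        return (
            req.method == self.method
            and req.source == self.source
            and req.endpoint == self.endpoint
            and isinstance(name, str)
            and self.min_name_len <= len(name) <= self.max_name_len
        )

    def respond(self, req: Request, rng: random.Random) -> Tuple[int, dict]:
        # 200 OK, echo the requested name, and pick a random age in [0, max_age].
        return 200, {"name": req.body["name"], "age": rng.randint(0, self.max_age)}


def serve(contract: CatsContract, req: Request, rng: random.Random) -> Optional[Tuple[int, dict]]:
    """Answer from the contract if it matches; None means 'no fake for this request'."""
    return contract.respond(req, rng) if contract.matches(req) else None


contract = CatsContract()
rng = random.Random(42)  # seeded so the "random" ages are reproducible in tests
status, body = serve(contract, Request("GET", "service-my-service", "service.pets/cats", {"name": "Mittens"}), rng)
print(status, body["name"], 0 <= body["age"] <= 20)  # 200 Mittens True
print(serve(contract, Request("GET", "service-my-service", "service.pets/cats", {"name": "Al"}), rng))  # None
```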

Contract enforcement

The second downside of service virtualisation is that over time a fake may cease to represent reality.

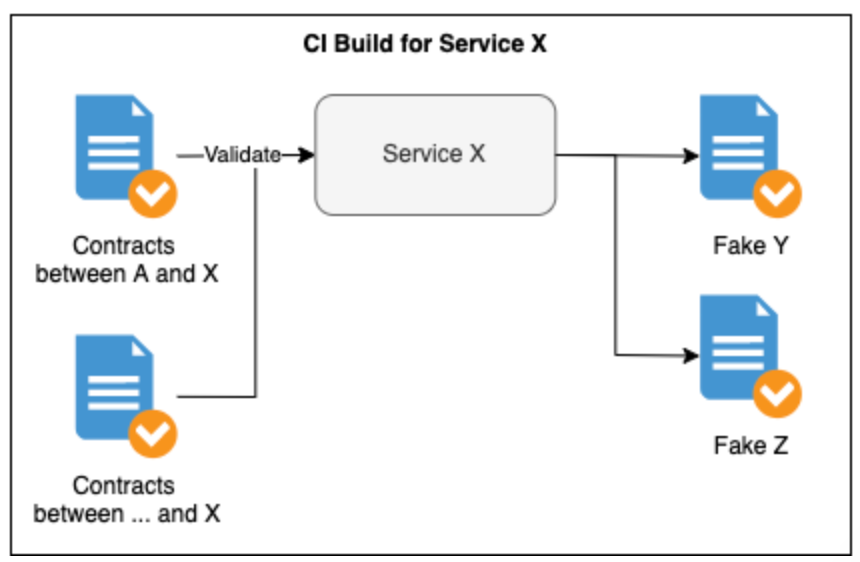

An established technique for dealing with this problem is Consumer Driven Contract Testing (CDC), which is why we call our fake endpoint definitions contracts. Essentially, CDC tests replay contracts against the real endpoints they represent to ensure the contracts remain valid over time.

Our fake endpoint definitions are quite literally contracts between consumer and provider services. They allow consumers to express that they use an endpoint in a certain way and expect the response to remain consistent. If a provider introduces a change which breaks this contract then we can prevent it from being deployed. At this point the provider can either be corrected or a conversation can be had between the consuming and providing teams so that the change can be applied safely.
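A minimal Python sketch of that check: each contract is replayed against the real provider, and the deploy is blocked if any consumer expectation fails. The names `Contract`, `verify`, `can_deploy`, `pets_v1` and `pets_v2` are hypothetical. Modelling expectations as per-field predicates is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# A provider endpoint: (endpoint, request body) -> (status, response body).
Provider = Callable[[str, dict], Tuple[int, dict]]


@dataclass
class Contract:
    consumer: str
    endpoint: str
    request_body: dict
    expected_status: int
    # Each field the consumer relies on, with a predicate its value must satisfy.
    expected_fields: Dict[str, Callable[[object], bool]]


def verify(contract: Contract, provider: Provider) -> List[str]:
    """Replay one contract against the real provider and list any violations."""
    status, body = provider(contract.endpoint, contract.request_body)
    problems = []
    if status != contract.expected_status:
        problems.append(f"status {status} != {contract.expected_status}")
    for field, ok in contract.expected_fields.items():
        if field not in body:
            problems.append(f"missing field {field!r}")
        elif not ok(body[field]):
            problems.append(f"unexpected value for {field!r}: {body[field]!r}")
    return problems


def can_deploy(contracts: List[Contract], provider: Provider) -> bool:
    """Gate a deploy: every consumer contract must still hold."""
    return all(not verify(c, provider) for c in contracts)


cats = Contract(
    consumer="service-my-service",
    endpoint="service.pets/cats",
    request_body={"name": "Tom"},
    expected_status=200,
    expected_fields={"name": lambda v: v == "Tom", "age": lambda v: isinstance(v, int)},
)


def pets_v1(endpoint: str, body: dict) -> Tuple[int, dict]:
    return 200, {"name": body["name"], "age": 3}


def pets_v2(endpoint: str, body: dict) -> Tuple[int, dict]:
    # A provider change that renames "age", which breaks the consumer's contract.
    return 200, {"name": body["name"], "years": 3}


print(can_deploy([cats], pets_v1))  # True
print(verify(cats, pets_v2))        # ["missing field 'age'"]
```

Returning a list of violations rather than a bare boolean gives the provider team something concrete to take into the conversation with the consuming team.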

One important nuance here is that contracts can be broken due to changes which on the surface don’t appear to be traditional breaking changes. For instance, providers may not be aware that consumers are reliant upon the formatting of certain fields. A consumer may expect that the sort codes it receives from some provider always contain hyphens (e.g. 12-34-56), so a change to this format is breaking for them.

Another important point is that contracts should encode the minimum consumer requirement. Suppose, for example, we created a contract which expected a provider to return a field called sort-code with a specifically formatted value, but the consumer didn’t actually pay attention to this field. In that case the field should not be included in the contract. If changes to a field won’t negatively affect the consumer, there is no sense in binding to it.
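The two points above can be illustrated together. In this Python sketch, each consumer's contract lists only the fields that consumer reads. A provider dropping the hyphens from sort-code therefore breaks the consumer that relies on the format and leaves the other untouched. The consumers `service.statements` and `service.payments`, the `account-number` field and the sample values are all hypothetical.

```python
import re
from typing import Callable, Dict, List

Predicate = Callable[[object], bool]
HYPHENATED_SORT_CODE = re.compile(r"\d{2}-\d{2}-\d{2}")


def is_hyphenated_sort_code(value: object) -> bool:
    return isinstance(value, str) and HYPHENATED_SORT_CODE.fullmatch(value) is not None


# Each consumer's contract lists only the fields that consumer actually reads.
contracts: Dict[str, Dict[str, Predicate]] = {
    # Hypothetical consumer that relies on sort codes containing hyphens.
    "service.statements": {"sort-code": is_hyphenated_sort_code},
    # Hypothetical consumer that ignores sort-code, so its contract doesn't mention it.
    "service.payments": {"account-number": lambda v: isinstance(v, str)},
}


def broken_consumers(response: dict) -> List[str]:
    """Which consumers would a provider response break?"""
    return [
        consumer
        for consumer, fields in contracts.items()
        if not all(name in response and ok(response[name]) for name, ok in fields.items())
    ]


before = {"sort-code": "12-34-56", "account-number": "12345678"}
after = {"sort-code": "123456", "account-number": "12345678"}
print(broken_consumers(before))  # []
print(broken_consumers(after))   # ['service.statements']
```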

Rollout

We’re really excited about this new tooling and so far feedback on it has been very encouraging. It’s important to note though that we’re still very early in this journey. We are currently rolling this out across the engineering organisation and are yet to put CDC into production.

As we move forward we will no doubt discover limitations with our new approach and our practices and tooling will continue to evolve. If any of this sounds interesting to you, why not take a look at our current job openings!