We have a lot of different secret information at Monzo, ranging from the keys that we use to sign your Mastercard transactions, to the credentials for external services we rely on. Most companies have to handle secrets of some kind. And it's really important that this information moves around our platform safely, as it'd be very valuable to attackers looking to steal money or data from Monzo customers!

In the Security team at Monzo, we've been working on a new system to manage secret information. It makes it easier for engineers to work with secrets securely, and makes it even harder for attackers to steal information that'd put you at risk.

Over the last month, we've identified the problems with our existing setup, designed our ideal solution, and implemented it. Now, we're a lot more confident in our controls, and engineers are more comfortable working with secrets.

How our platform works

The Monzo app is powered by lots of software. This software handles everything from making payments to generating the list of transactions you can see in your app. We run this software on a bunch of computers in the cloud (a datacentre for rent), and we call the combination of software and computers our platform.

Here's an overview:

We run about 1,100 microservices written in Go. Microservices are small programs that communicate together to power our app and interact with external systems.

We run Kubernetes, a tool for managing lots of software in a scalable way. We manage Kubernetes ourselves, including etcd, which Kubernetes uses to store configuration and data.

To store data, we use Apache Cassandra, an open source database.

We use Kafka and NSQ, pieces of software that help us queue messages to process.

And we use Typhon, our microservice framework, to handle RPC communication between services.

Read our post for a detailed explanation of how our platform works.

We were distributing our secrets using Kubernetes and Hashicorp Vault

In the past we've stored a fair amount of lower-security secret information – secrets that wouldn't cause much harm if they were stolen – in Kubernetes secrets. This lets us store information securely in a way that isn't accessible by everything on our network.

Kubernetes stores the data in plaintext in etcd, a database where it stores all configuration data. It gives the secrets to applications running on our servers as a file.

We've known for a while that Kubernetes' secrets feature has some problems, which we'll detail later on. So for a handful of highly secure secrets – secrets that would cause significant harm if they were to leak – we've been using Hashicorp Vault. Vault is a popular application for managing cryptography (mathematical processes for hiding information) and secret information. At Monzo, only a handful of engineers have access to Vault, and they tightly control who else can use it.

We needed to improve the way we were distributing our secrets

In the Security team, we're constantly reassessing our weaknesses. What worked when we were a small startup becomes less acceptable as we grow, especially as Monzo becomes an increasingly attractive target to hackers because we have more customers, hold larger balances, and become better known.

We decided that our secret distribution mechanisms weren't secure enough for Monzo today. These were some of the issues:

1. Having two mechanisms created confusion, but neither one was perfect

It wasn't ideal that we had two secret distribution mechanisms: it meant more information for engineers to forget! Ideally we'd choose a good solution and use it everywhere. But even though Vault is a lot more secure, we weren't using it that often because it's a little tricky to work with (and mistakes can lead to outages). Most engineers didn't know how to use it at all, which meant we used it even less! Having two mechanisms also meant it was often unclear which one was right for a given secret.

2. The way we were using Kubernetes secrets didn't work well

Using Kubernetes secrets wasn't as easy or secure as it could be.

The secrets were readable to anyone with access to our etcd nodes. Getting into these nodes is really hard, so we didn't consider this a big problem in the past, but we now have engineering capacity to improve this further.

Creating secrets and adding them to your service requires messing around with Kubernetes configuration files. Unlike deployments, we don't have any nice tooling for this.

Reading them into your service is a little fiddly, and we were lacking libraries to standardise it.

3. The way we were using Vault also had issues

It was impossible to configure or even inspect without a key ceremony (where a few engineers who have access to Vault get together and configure it). A key ceremony takes about three hours of work.

Writing new secrets is basically a safe operation: you can't really do anything malicious by writing new secrets. But even this needed a key ceremony, which was time consuming and unnecessary.

We didn't have any way to configure Vault using code, so we were using ad-hoc scripts, which took time and were prone to errors.

To let a service read from Vault, you'd have to generate a secret key for that service, which we'd then manually store as a Kubernetes secret. Every time you created a Vault secret for a new service, you also had to create a Kubernetes secret! This chicken-and-egg problem created more work and added complexity.

So we came up with our dream mechanism for secret distribution

To address these issues, we enumerated requirements for our dream secret distribution mechanism:

1. Secrets are encrypted wherever they're stored

This means that if you literally went into a datacentre and stole our servers, you wouldn't get any useful information.

2. It's impossible to read all the secrets at once, but easy to let services read specific secrets

This keeps it easy for engineers to work with secrets, but hard for attackers to steal them all at once.

3. It's easy to add brand new secrets, and hard to overwrite existing ones.

Secret substitution (replacing an existing key with one you control) can be dangerous, but writing new secrets is a lot safer.

4. It's easy to let specific applications read secrets, without a chicken and egg problem.

5. It's auditable.

We can easily track the actions of humans and computers.

6. It's inspectable.

You can view its configuration and what secrets exist.

We soon determined that Vault, if we configured it more sensibly, could cover all our use cases.

To make maintaining a complex Vault configuration easier, we decided to specify it as code using Terraform.

We configured Vault more sensibly to suit our needs

Authenticating to Vault

To make sure we can access secret information securely, but without having to create new secrets that add unnecessary complexity, we decided to use its Kubernetes auth mechanism. This lets Kubernetes applications present their service account token (like a password, attached by default to all applications in Kubernetes) to Vault. Vault will then issue its own token with permissions that are configured for that application. In our case, we chose to let all applications get a token with a default Vault policy defining what actions are allowed. We called this policy kubernetes-reader - more on that later.
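To make the login step concrete, here's a minimal sketch in Go of the request a service would send. The endpoint path and the role/jwt fields come from Vault's Kubernetes auth API, and the token file is where Kubernetes mounts service account tokens by default. The Vault address and the role name "default" are assumptions for illustration, and this isn't our actual client code.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"os"
	"strings"
)

// tokenPath is where Kubernetes mounts a pod's service account token by default.
const tokenPath = "/var/run/secrets/kubernetes.io/serviceaccount/token"

// loginBody is the JSON payload for Vault's Kubernetes auth login endpoint.
type loginBody struct {
	Role string `json:"role"`
	JWT  string `json:"jwt"`
}

// NewLoginRequest builds (but does not send) the login request.
// vaultAddr and role are assumptions; real values depend on the deployment.
func NewLoginRequest(vaultAddr, role, jwt string) (*http.Request, error) {
	payload, err := json.Marshal(loginBody{Role: role, JWT: strings.TrimSpace(jwt)})
	if err != nil {
		return nil, err
	}
	url := strings.TrimRight(vaultAddr, "/") + "/v1/auth/kubernetes/login"
	req, err := http.NewRequest(http.MethodPost, url, bytes.NewReader(payload))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

func main() {
	jwt, err := os.ReadFile(tokenPath)
	if err != nil {
		fmt.Println("no service account token found (not running in a pod?):", err)
		return
	}
	// Hypothetical address and role name.
	req, err := NewLoginRequest("https://vault.example.internal:8200", "default", string(jwt))
	if err != nil {
		fmt.Println("building request failed:", err)
		return
	}
	fmt.Println("would POST to", req.URL)
}
```

On a successful login, Vault's response carries the new token in its auth.client_token field, and the service sends that token with later requests.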

Service account tokens are Kubernetes secrets. This means they're still not as secure as we'd like, because they're stored unencrypted in etcd. But, given that the Kubernetes secret is just a token which allows access to Vault, this reduces how dangerous it is to access Kubernetes secrets; you'd now need to talk to Vault as well, which means that you'd need an active presence inside our network. In our old approach, it would be dangerous if an attacker got access to etcd, and they wouldn't need to access anything else.

We also decided that we could improve the security of Kubernetes secrets by encrypting them at rest using an experimental Kubernetes feature. We generated an AES key (a very strong key for encrypting data fast), stored inside Vault, which we use to encrypt and decrypt Kubernetes secrets on the fly; the encrypted version is stored in etcd, and the unencrypted version only ever exists in the service that uses it.
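As an illustration of what encrypting at rest means here, the sketch below seals a value with AES before it would be written to storage and opens it again on read. It uses AES-GCM from Go's standard library. Which AES mode our Kubernetes setup uses, and how the key travels from Vault to Kubernetes, are not covered above, so treat the mode and the locally generated key as assumptions. This is not Kubernetes' own implementation.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"errors"
	"fmt"
)

// Encrypt seals plaintext with AES-GCM and prepends the random nonce,
// so the result is safe to store as a single blob.
func Encrypt(key, plaintext []byte) ([]byte, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return nil, err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return nil, err
	}
	// Passing nonce as dst makes Seal append the ciphertext after it.
	return gcm.Seal(nonce, nonce, plaintext, nil), nil
}

// Decrypt splits off the nonce and opens the ciphertext.
// It fails if the key is wrong or the data was tampered with.
func Decrypt(key, sealed []byte) ([]byte, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return nil, err
	}
	if len(sealed) < gcm.NonceSize() {
		return nil, errors.New("ciphertext too short")
	}
	nonce, ciphertext := sealed[:gcm.NonceSize()], sealed[gcm.NonceSize():]
	return gcm.Open(nil, nonce, ciphertext, nil)
}

func main() {
	key := make([]byte, 32) // AES-256; generated locally only for this demo
	if _, err := rand.Read(key); err != nil {
		panic(err)
	}
	sealed, err := Encrypt(key, []byte("example-secret"))
	if err != nil {
		panic(err)
	}
	opened, err := Decrypt(key, sealed)
	if err != nil {
		panic(err)
	}
	fmt.Printf("stored %d bytes, recovered %q\n", len(sealed), opened)
}
```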

We wanted to avoid the issue of having to create a second Kubernetes secret for every new Vault secret, reducing complexity for our engineers. Service account tokens mostly avoid this chicken-and-egg problem, because Kubernetes handles creating them and distributing them to services for us in a very convenient way.

Human interactions with Vault

We have an internal authentication mechanism which allows staff members to prove their identity and perform various actions, like replacing a card for a customer. It's really important that we're able to use this mechanism to ensure that people can only do what they need to do, to protect our customers from someone in control of a staff member's account. To allow most engineers access to Vault, it was important that access went through our normal authentication.

We soon intend to do this via a custom Vault auth plugin, which is a little safer and easier to work with, but to save time during this project, we instead used a web service in front of Vault which intercepts its requests. We gave this service's service account permission to use a web-user Vault policy which allowed all the required human actions, and permission to issue 'child' tokens. Any authenticated engineer connecting through the proxy has a child token transparently inserted into their requests to Vault. The token is linked to the engineer's user ID, which means that we can identify individuals in the audit logs.
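The token-injection idea can be sketched as a small reverse proxy in Go. X-Vault-Token is the header Vault reads tokens from. Everything else here is an assumption for illustration: the authenticate and tokenFor functions stand in for our internal authentication and for however child tokens are minted and cached, and the X-Demo-User header exists only so the demo can run. It is not the service we run.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
)

// NewVaultProxy forwards authenticated requests to Vault, replacing any
// client-supplied token with the child token tied to the engineer's user ID.
// authenticate and tokenFor are hypothetical hooks.
func NewVaultProxy(vault *url.URL,
	authenticate func(*http.Request) (userID string, ok bool),
	tokenFor func(userID string) (token string, ok bool)) http.Handler {

	proxy := httputil.NewSingleHostReverseProxy(vault)
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		userID, ok := authenticate(r)
		if !ok {
			http.Error(w, "unauthenticated", http.StatusUnauthorized)
			return
		}
		token, ok := tokenFor(userID)
		if !ok {
			http.Error(w, "no vault token for user", http.StatusForbidden)
			return
		}
		r.Header.Set("X-Vault-Token", token) // overwrite whatever the client sent
		proxy.ServeHTTP(w, r)
	})
}

// demo runs the proxy against a fake Vault and returns the response status
// plus the token the fake Vault actually received.
func demo() (int, string) {
	seen := make(chan string, 1)
	vault := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		seen <- r.Header.Get("X-Vault-Token")
		w.WriteHeader(http.StatusOK)
	}))
	defer vault.Close()

	target, err := url.Parse(vault.URL)
	if err != nil {
		return 0, ""
	}
	tokens := map[string]string{"alice": "child-token-for-alice"}
	handler := NewVaultProxy(target,
		func(r *http.Request) (string, bool) {
			id := r.Header.Get("X-Demo-User")
			return id, id != ""
		},
		func(id string) (string, bool) {
			t, ok := tokens[id]
			return t, ok
		})

	req := httptest.NewRequest(http.MethodGet, "/v1/sys/health", nil)
	req.Header.Set("X-Demo-User", "alice")
	req.Header.Set("X-Vault-Token", "client-supplied")
	rec := httptest.NewRecorder()
	handler.ServeHTTP(rec, req)

	select {
	case token := <-seen:
		return rec.Code, token
	default:
		return rec.Code, ""
	}
}

func main() {
	status, token := demo()
	fmt.Println(status, token)
}
```

Because the proxy overwrites the header rather than trusting it, an engineer can't smuggle in a different token, and every request Vault sees is attributable to a user ID.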

This mechanism lets us present the Vault web interface, for simple inspection of Vault, but it also lets us write tooling that interacts with Vault. This tooling can obtain a Monzo access token as usual, and use it to make requests to Vault.

We then wrote a tool, shipper secret, which allowed engineers to create new secrets (in our traditional emoji-heavy way):

![$ shipper secret service.emoji --create ⇢ Authenticating against s101 ? Please enter a descriptive name, eg 'aws/secret-access-key': canary ? Version identifier: 2019-05-11 🔑 Your secret will be at path secret/k8s/default/s-emoji/canary/2019-05-11 under the key 'data' 🔍 Existing versions for canary in s101: [2019-05-10] ? 👉 Confirm when ready to paste secret Yes ? ↔️ Do you want to trim the trailing newline in your file? Yes ? 🆕 Write secret/k8s/default/s-emoji/canary/2019-05-11 to environment s101? Yes 🔑 Secret created at secret/k8s/default/s-emoji/canary/2019-05-11 in s101](https://images.ctfassets.net/ro61k101ee59/JuIjSUg6ATWiGDhV1nS8g/8ee3b169bee58c7a549c50c39af47b41/Screen_Shot_2019-07-26_at_14.59.05.png?w=1280&q=90)

This tool enforces a few style conventions that we designed to make Vault easier to deal with:

We treat secrets as immutable, which means we can easily see a history of any changes to the code.

We treat the last section of the path as a version identifier, which means we create a new version of the secret every time we make changes to it. This gives us a clear and convenient history of any changes in the application code. It also means we don't have to worry about handling secret updates in our applications; a secret named a certain way will never change. A secret will only change if you specifically request one of a new name.

We check that a secret exists in our staging environment (s101) when writing to prod, and warn if it doesn't. This encourages engineers to keep things consistent and test our application code end to end.

We always write the secrets under the key data, even though Vault secrets can contain many keys and values. We've found that having multiple keys in one secret can lead to complexity, because there's no way to determine which keys exist without reading the entire secret. So we encourage engineers to create multiple secrets in the same 'folder' instead, e.g. aws/secret-access-key and aws/access-key-id.
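The conventions above can be sketched in a few lines of Go. The path layout matches the one shown in the shipper output. The Store type is an in-memory stand-in for Vault, and the function names are hypothetical rather than shipper's real internals.

```go
package main

import (
	"errors"
	"fmt"
)

// ErrExists is returned when a write would overwrite an existing version.
var ErrExists = errors.New("secret version already exists")

// SecretPath builds secret/k8s/<namespace>/<serviceAccount>/<name>/<version>.
// The last path segment is the version identifier.
func SecretPath(namespace, serviceAccount, name, version string) string {
	return fmt.Sprintf("secret/k8s/%s/%s/%s/%s", namespace, serviceAccount, name, version)
}

// Store is an in-memory stand-in for one environment's Vault.
type Store map[string]string

// Create writes a value at a new path and refuses to overwrite an
// existing one, which is what makes secrets immutable.
func (s Store) Create(path, value string) error {
	if _, ok := s[path]; ok {
		return ErrExists
	}
	s[path] = value
	return nil
}

// WriteProd writes to prod and reports whether the same path is missing
// from staging, so the caller can warn the engineer.
func WriteProd(staging, prod Store, path, value string) (warn bool, err error) {
	_, inStaging := staging[path]
	return !inStaging, prod.Create(path, value)
}

func main() {
	staging, prod := Store{}, Store{}
	path := SecretPath("default", "s-emoji", "canary", "2019-05-11")

	warn, err := WriteProd(staging, prod, path, "example-value")
	fmt.Println("path:", path, "warn:", warn, "err:", err)

	// A second write to the same version is rejected; a change needs a new version.
	_, err = WriteProd(staging, prod, path, "replacement")
	fmt.Println("overwrite refused:", errors.Is(err, ErrExists))
}
```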

Computer interactions with Vault

We designed the default kubernetes-reader policy to allow applications to do a variety of safe actions. As manually configuring Vault requires a key ceremony, we wanted to cover as many use cases as possible for a service by default. Fortunately, Vault has a very powerful policy templating mechanism which lets you do something like this:

![# allow reading of secrets like secret/k8s/default/s-emoji/canary/2019-05-11 path "secret/k8s/{{identity.entity.aliases.kubernetes.metadata.service_account_namespace}}/{{identity.entity.aliases.kubernetes.metadata.service_account_name}}/*" { capabilities = ["read", "list"] }](https://images.ctfassets.net/ro61k101ee59/Pz4xptk9sSXX9kAzSFCnl/427cc0427f05e17a5f270e3ce58d96fb/Screen_Shot_2019-07-26_at_14.59.17.png?w=1280&q=90)

Essentially, Vault allows you to interpolate the details of the authenticated user (eg their service account name) into the policy automatically, including into the allowed paths. This means that we can give every service a special path in Vault that they can read by default. In combination with the fact that all engineers can now create new secrets in Vault, we have already solved the problem of allowing engineers to create secrets for their services.
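To show the effect of the templating, here's a small Go sketch that fills in the template from the policy above for one identity and checks which paths it would cover. Vault does this internally. The Identity type and both functions are hypothetical, and the matching is simplified to the trailing-glob case used in our policy.

```go
package main

import (
	"fmt"
	"strings"
)

// Identity holds the service account details attached to a Kubernetes login.
type Identity struct {
	Namespace      string
	ServiceAccount string
}

// policyPath is the templated path from the kubernetes-reader policy.
const policyPath = "secret/k8s/{{identity.entity.aliases.kubernetes.metadata.service_account_namespace}}/{{identity.entity.aliases.kubernetes.metadata.service_account_name}}/*"

// Render substitutes the identity's details into the templated path.
func Render(template string, id Identity) string {
	return strings.NewReplacer(
		"{{identity.entity.aliases.kubernetes.metadata.service_account_namespace}}", id.Namespace,
		"{{identity.entity.aliases.kubernetes.metadata.service_account_name}}", id.ServiceAccount,
	).Replace(template)
}

// Allowed reports whether path is covered by the rendered policy path.
// A trailing * is treated as a prefix match; anything else must match exactly.
func Allowed(rendered, path string) bool {
	if strings.HasSuffix(rendered, "*") {
		return strings.HasPrefix(path, strings.TrimSuffix(rendered, "*"))
	}
	return rendered == path
}

func main() {
	id := Identity{Namespace: "default", ServiceAccount: "s-emoji"}
	rendered := Render(policyPath, id)
	fmt.Println(rendered)
	fmt.Println(Allowed(rendered, "secret/k8s/default/s-emoji/canary/2019-05-11"))
	fmt.Println(Allowed(rendered, "secret/k8s/default/s-other/canary/2019-05-11"))
}
```

A single policy therefore gives each service its own readable subtree, with no per-service configuration in Vault.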

Libraries

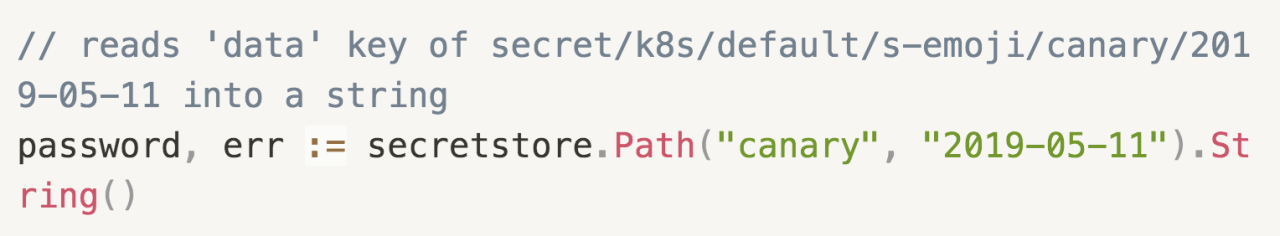

We wrote some new library code to make it super easy for engineers to read secrets into their services. Our tooling encourages engineers to only have one key in their Vault secrets (which are actually each a key-value store), named data. Our policies mean that secrets for s-emoji in namespace default are at path secret/k8s/default/s-emoji/... Our library code takes advantage of these standards, allowing an interface like:

This interface is really similar to the way we read configuration variables, so it was intuitive to our engineers.
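As a rough sketch of what a library built on these conventions could look like, the Go code below resolves a short secret name to its full path and returns the value stored under the data key. The names Reader, Get and KV are hypothetical and this is not our real library API. The fake KV stands in for a Vault client.

```go
package main

import (
	"errors"
	"fmt"
)

// KV is the minimal read capability the sketch needs from a Vault client.
type KV interface {
	Read(path string) (map[string]string, error)
}

// Reader resolves short secret names for one service.
type Reader struct {
	kv             KV
	namespace      string
	serviceAccount string
}

// Get reads secret/k8s/<namespace>/<serviceAccount>/<name> and returns
// the value stored under the conventional "data" key.
func (r Reader) Get(name string) (string, error) {
	path := fmt.Sprintf("secret/k8s/%s/%s/%s", r.namespace, r.serviceAccount, name)
	fields, err := r.kv.Read(path)
	if err != nil {
		return "", fmt.Errorf("reading %s: %w", path, err)
	}
	value, ok := fields["data"]
	if !ok {
		return "", fmt.Errorf("secret %s has no %q key", path, "data")
	}
	return value, nil
}

// fakeKV is an in-memory KV used only for the demo.
type fakeKV map[string]map[string]string

func (f fakeKV) Read(path string) (map[string]string, error) {
	fields, ok := f[path]
	if !ok {
		return nil, errors.New("not found")
	}
	return fields, nil
}

func main() {
	kv := fakeKV{
		"secret/k8s/default/s-emoji/canary/2019-05-11": {"data": "example-value"},
	}
	r := Reader{kv: kv, namespace: "default", serviceAccount: "s-emoji"}

	value, err := r.Get("canary/2019-05-11")
	fmt.Println(value, err)
}
```

Because the namespace and service account are fixed per service, application code only ever names the secret and its version.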

What's next?

We're continuing to make a big bet on Vault as the future of handling secure information at Monzo. We have a few projects in mind:

We'd like to get Vault to issue short lived credentials for our services which further restrict their access to Cassandra, our database, so we have more guarantees that an attacker couldn't write to the database.

It would also be great to issue AWS credentials out of Vault, and keep them updated. Right now we store these as static secrets in Vault, so they never change. In security we like to rotate things so that if they are ever stolen, they aren't useful for long.

We're interested in ways to scale Vault and make sure it's able to handle all our demands, even as our customer base grows, and we use it for more tasks.

If you found this interesting and want to work on projects like it, we're hiring! We're looking in lots of areas including defensive and offensive security, and we're remote-friendly!